I've been quietly tracking how infrastructure actually evolves, and Walrus keeps standing out for the right reasons. Not loud. Not rushed. Just steady progress where it counts. This is a grounded look at what’s changing, what feels intentional, and why the direction makes sense if decentralized storage is supposed to be usable rather than just theoretical. Context, once and done: @Walrus 🦭/acc $WAL #walrus

One update that matters for real users is the Tusky migration. Older storage paths are being phased out, and users are expected to export their existing blobs and move the data to supported endpoints. What stands out is how this was handled. No panic messaging. No pressure tactics. Clear tooling guidance and partner coordination give builders time to move data properly instead of scrambling at the last minute. Anyone who stored important files through earlier Tusky routes should already be planning exports and validating data integrity. This is basic ops hygiene, and the way it was communicated shows an understanding of how real users operate.
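A minimal sketch of the "validate data integrity" step, assuming you have already exported your blobs into a local directory: fingerprint every file with SHA-256 before re-uploading, then read the data back from the new endpoint into a second directory and compare. It uses only Python's standard `hashlib` and `pathlib`; it does not call any Tusky or Walrus tool, and the function names and manifest shape are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large blobs never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(export_dir: Path) -> dict[str, str]:
    """Map each exported file's path (relative to export_dir) to its SHA-256 hex digest."""
    return {
        p.relative_to(export_dir).as_posix(): sha256_file(p)
        for p in sorted(export_dir.rglob("*"))
        if p.is_file()
    }


def verify_against_manifest(target_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose contents changed."""
    problems = []
    for rel_path, expected in manifest.items():
        candidate = target_dir / rel_path
        if not candidate.is_file() or sha256_file(candidate) != expected:
            problems.append(rel_path)
    return problems
```

In practice you would save the manifest (for example with `json.dump`) before deleting anything from the old location, and treat any non-empty result from `verify_against_manifest` as a blocker for the migration.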

Network readiness and funding explain a lot about the current pace. The project secured serious backing early on, and that capital is now visible in how things are rolling out. Operator onboarding, ecosystem partnerships, and mainnet preparation are happening in parallel rather than one after another. That matters because storage networks break when either demand or supply gets ahead of the other. This approach feels deliberate: build the rails first, then invite heavier traffic. It is slower, but also more sustainable.

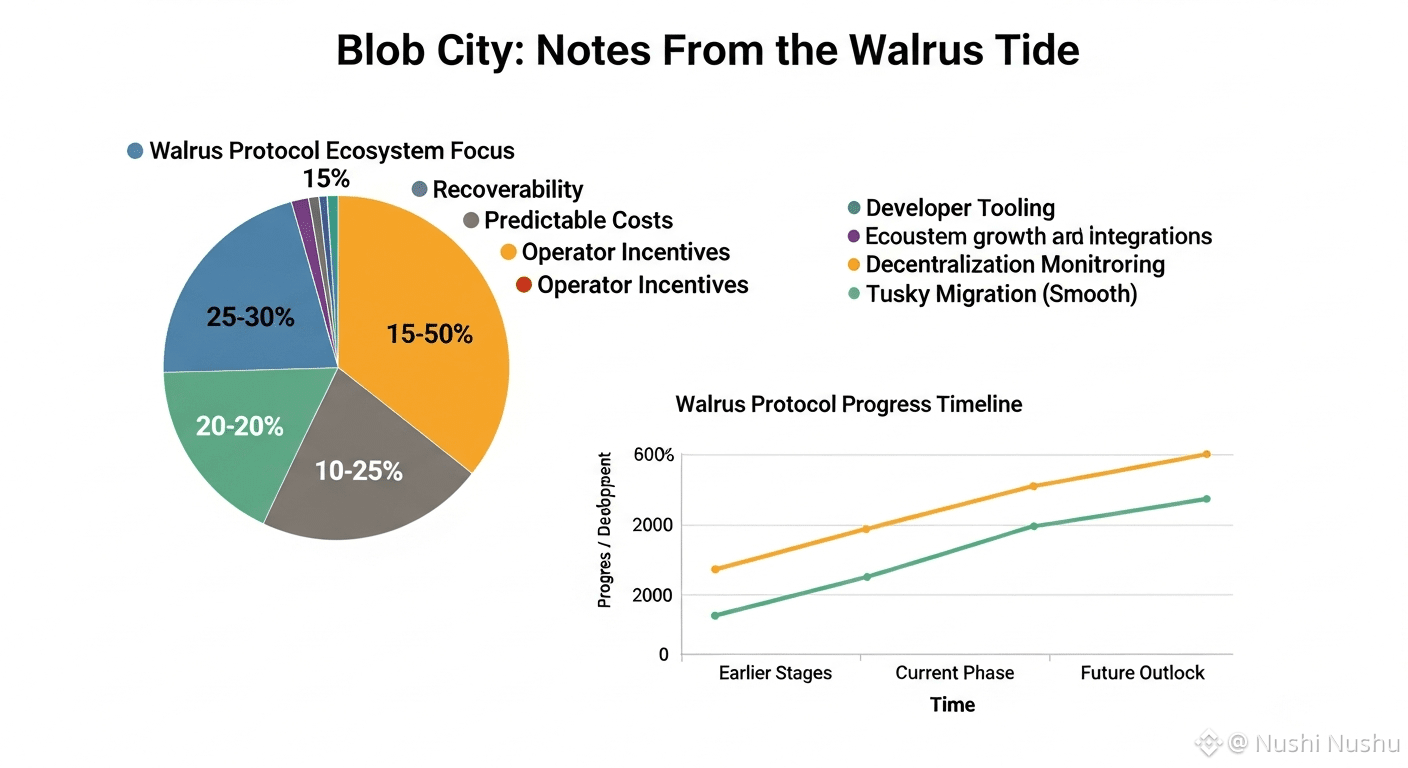

On the technical side, the storage design deserves attention. Walrus treats large blobs as the core product, not an edge case. The recovery model is built so data can be reconstructed efficiently even when parts of the network are unavailable. Instead of brute-force replication everywhere, the system focuses on recoverability and predictable performance. For anyone running nodes or serving large datasets, this meaningfully changes the cost and reliability equation. Faster recovery means fewer edge-case failures and less operational stress when something goes wrong.
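To make the replication-versus-recoverability trade-off concrete, here is a toy erasure code: split a blob into `k` data shards plus one XOR parity shard, so any single lost shard can be rebuilt from the rest. This is an illustrative assumption, not Walrus's actual encoding, which is far more elaborate and tolerates many simultaneous failures; `encode`, `decode`, and `storage_overhead` are hypothetical names.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length shards."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k zero-padded data shards and append one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def decode(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the blob when at most one of the k + 1 shards is missing (None)."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("this single-parity toy can only recover one lost shard")
    repaired = list(shards)
    if missing:
        # XOR of all k + 1 shards is zero, so the lost one equals the XOR of the rest.
        repaired[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    # Drop the parity shard, join the data shards, and strip the zero padding.
    return b"".join(repaired[:-1])[:original_len]


def storage_overhead(k: int, n: int) -> float:
    """Bytes stored per original byte when a blob becomes n shards, any k of which suffice."""
    return n / k
```

With `k = 4`, the toy stores 5/4 = 1.25× the original bytes and survives one lost shard, while full replication costs a whole extra blob for every additional copy. Production codes add more parity shards to survive more failures, but the principle is the same: fault tolerance is bought with parity rather than with full replicas.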

Ecosystem growth has been quiet but consistent. Integrations across content platforms, compute layers, and delivery tooling have been rolling out without overhype. These integrations matter because they reduce friction. Less custom glue code means developers can actually ship products instead of babysitting infrastructure. This is the difference between experimentation and adoption. When storage plugs in cleanly, teams stop treating it as a risk surface.

Token mechanics are another area worth watching closely. The token is positioned around storage payments, operator incentives, and governance participation. Distribution phases are public, which helps anyone modeling long-term participation instead of guessing. There are signs the team is still refining how pricing and incentives interact, so that storage costs stay predictable while operators remain properly rewarded. That balance is where most storage networks struggle and quietly fail.

Zooming out, this feels focused on utility over narrative. A lot of earlier storage projects talked endlessly about decentralization but never solved usability. Walrus is aiming to make blob storage first-class, with availability guarantees, developer-friendly tooling, and economics that do not collapse under real workloads. If that direction holds, it becomes relevant for AI datasets, media libraries, research archives, and applications that need data to behave consistently over long periods of time.

That said, it is not risk-free. Operator decentralization is something to monitor as the network scales. The balance between independent operators and larger infrastructure providers will define how resilient the system is in practice. Pricing assumptions also need to be tested under load. Cheap storage claims mean nothing if bandwidth and recovery costs spike later. Gradual adoption with real data is the smart move here.

Export and verify existing data early. Run real-world trials with large files and deliberately test recovery scenarios. Model how token usage fits into operating costs instead of treating it as a secondary detail. Track ecosystem integrations that directly improve delivery reliability and latency, because those are the pieces that decide whether something works in production.
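One way to keep token usage from becoming a "secondary detail" is a small cost model that puts storage payments, bandwidth, and recovery on the same ledger. The sketch below assumes storage is priced in WAL per GiB per billing period; every field in the hypothetical `CostAssumptions` class is a placeholder you fill from your own quotes and measurements, not a published Walrus price.

```python
from dataclasses import dataclass, replace
from typing import Dict, Iterable


@dataclass(frozen=True)
class CostAssumptions:
    # Every value is a hypothetical input you supply; none is a published Walrus price.
    storage_wal_per_gib_period: float  # storage price in WAL per GiB per billing period
    periods: int                       # how many billing periods the data must stay stored
    wal_usd: float                     # WAL-to-USD rate used for the conversion
    egress_usd_per_gib: float          # delivery/bandwidth cost in your own serving stack
    reads_per_gib: float               # how many times each stored GiB is served in total
    recovery_usd_per_gib: float        # cost to re-fetch and re-upload data after an incident
    recovery_events: int               # incidents you budget for over the whole period


def total_cost_usd(stored_gib: float, a: CostAssumptions) -> Dict[str, float]:
    """Split the USD bill into the WAL-priced storage part and the USD-priced operations."""
    storage = stored_gib * a.storage_wal_per_gib_period * a.periods * a.wal_usd
    bandwidth = stored_gib * a.reads_per_gib * a.egress_usd_per_gib
    recovery = stored_gib * a.recovery_usd_per_gib * a.recovery_events
    return {
        "storage": storage,
        "bandwidth": bandwidth,
        "recovery": recovery,
        "total": storage + bandwidth + recovery,
    }


def token_price_sensitivity(
    stored_gib: float, a: CostAssumptions, wal_prices: Iterable[float]
) -> Dict[float, float]:
    """Total USD cost at each WAL price, holding every other assumption fixed."""
    return {p: total_cost_usd(stored_gib, replace(a, wal_usd=p))["total"] for p in wal_prices}
```

Running `token_price_sensitivity` across a range of WAL prices shows how much of the bill moves with the token and how much (bandwidth, recovery) does not, which is exactly the kind of load-dependent cost the pricing risks above warn about.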

Final take. This does not feel like hype-driven infrastructure. It feels like plumbing being done properly. That is rarely exciting, but it is exactly what matters when applications depend on data availability. If the focus stays on recoverability, predictable costs, and operator incentives, this could quietly become a storage layer many projects rely on without thinking twice.

This is one of those cases where progress is subtle but meaningful, and that usually ages better than noise.

Blob City: Notes From the Walrus Tide

Disclaimer: Includes third-party opinions. Not financial advice. May include sponsored content. See T&Cs.
