Have you ever paused to consider what actually happens behind the scenes when you upload a large file to a decentralized network? With nodes constantly coming and going, keeping data both safe and accessible without massive redundancy is a tricky balance. Walrus, a storage protocol built on Sui, approaches this challenge with efficient erasure coding, verifiable availability proofs, and onchain coordination designed to hold up when nodes fail or misbehave.

At its core lies Red Stuff, Walrus’s two-dimensional erasure coding scheme. Instead of treating the blob as a simple linear string, it organizes the data into a matrix. The primary encoding runs along the columns, and each row of the resulting extended matrix becomes a primary sliver. The secondary encoding runs along the rows, and each column of that extended matrix becomes a secondary sliver. Each storage node then receives a unique pair: one primary and one secondary sliver. The payoff shows up in recovery: to reconstruct a lost sliver, a node only needs to pull data proportional to a single sliver’s size rather than the entire blob.
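To make the matrix picture concrete, here is a minimal toy sketch of a two-dimensional code. It is not Red Stuff itself: it swaps in a single XOR parity symbol per row and per column (a simple product code) in place of the real erasure code, so it tolerates only one missing symbol per sliver. The function names (`encode_2d`, `sliver_pair`, `recover_primary`) are hypothetical and chosen for illustration. What it does show is the recovery pattern: a node rebuilds its lost primary sliver by pulling one symbol from each peer's secondary sliver.

```python
from functools import reduce
from operator import xor


def encode_2d(blob: bytes, k: int) -> list[list[int]]:
    """Lay the blob out as a k x k matrix, then extend it to (k+1) x (k+1)
    with XOR parity. Toy stand-in for a real erasure code."""
    if len(blob) > k * k:
        raise ValueError("blob does not fit in a k x k matrix")
    padded = blob.ljust(k * k, b"\0")
    rows = [list(padded[r * k:(r + 1) * k]) for r in range(k)]
    # Encode along rows: append one parity symbol to every row.
    for row in rows:
        row.append(reduce(xor, row))
    # Encode along columns: append one parity row covering every column.
    rows.append([reduce(xor, col) for col in zip(*rows)])
    return rows


def sliver_pair(matrix: list[list[int]], i: int) -> tuple[list[int], list[int]]:
    """Node i holds row i (primary sliver) and column i (secondary sliver)."""
    primary = list(matrix[i])
    secondary = [row[i] for row in matrix]
    return primary, secondary


def recover_primary(i: int, peer_secondaries: dict[int, list[int]], n: int) -> list[int]:
    """Rebuild row i from one symbol per peer. Each peer j contributes only
    symbol i of its secondary sliver; at most one peer may be missing."""
    known = {j: secondary[i] for j, secondary in peer_secondaries.items()}
    missing = [j for j in range(n) if j not in known]
    if len(missing) > 1:
        raise ValueError("toy XOR parity can fill in only one missing symbol")
    if missing:
        # Every extended row XORs to zero, so the gap is the XOR of the rest.
        known[missing[0]] = reduce(xor, known.values())
    return [known[j] for j in range(n)]
```

In this sketch, a node that lost its primary sliver downloads `n - 1` symbols in total, roughly one sliver's worth, instead of the whole matrix.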

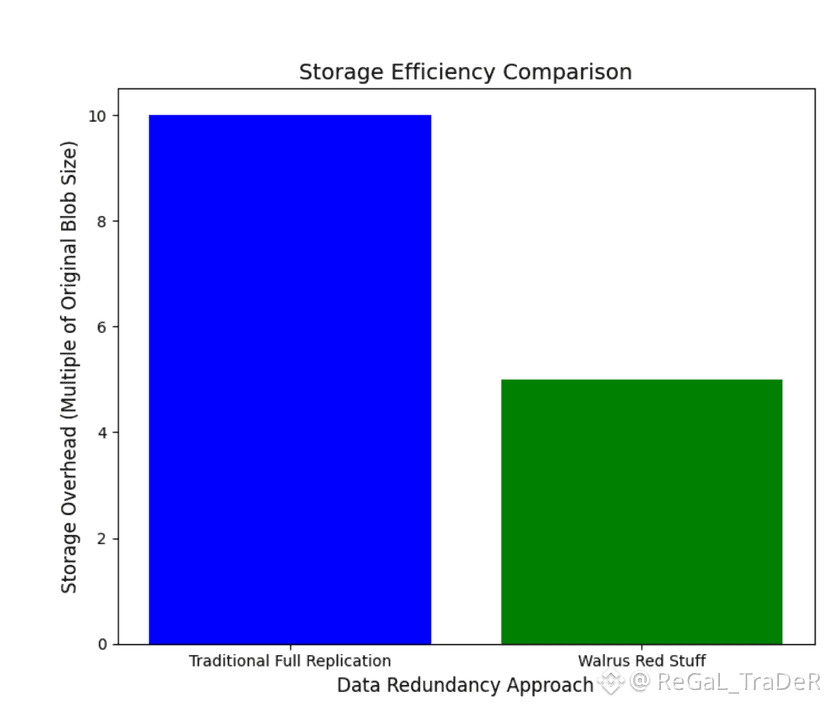

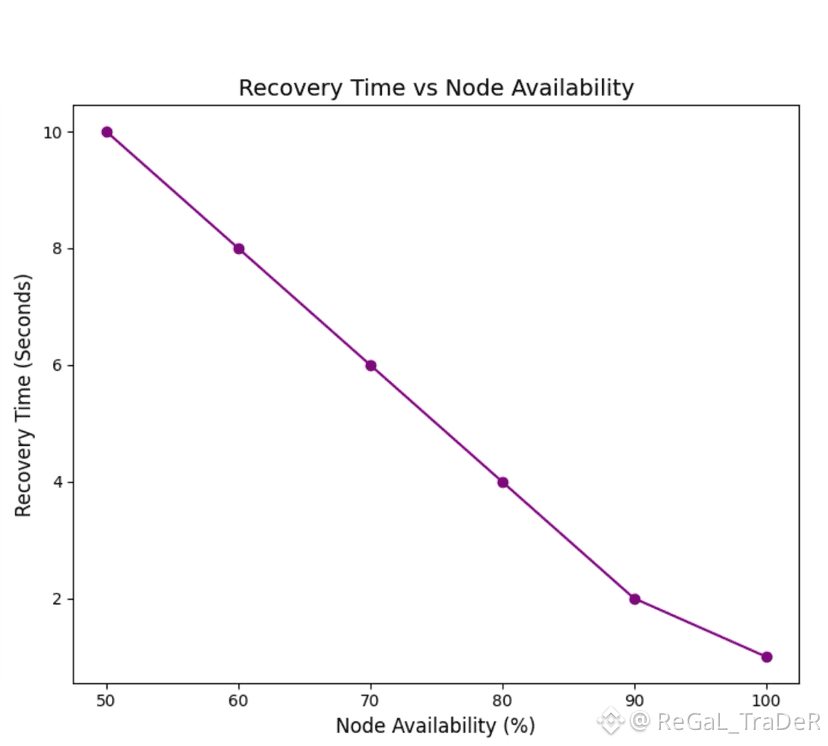

The self-healing aspect stands out in dynamic environments. When a node drops out, recovery relies on quorum thresholds: typically querying about one-third of peers to rebuild a secondary sliver, or two-thirds to rebuild a primary one. This keeps bandwidth low and reconstruction lightweight, even under node churn. Overall storage overhead lands around five times the original blob size, offering durability comparable to full replication at a fraction of the cost. Vector commitments add a cryptographic layer: each sliver has its own commitment, and these roll up into a top-level blob commitment that serves as a tamper-evident fingerprint. Anyone can verify an individual sliver against that fingerprint without downloading everything.
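One common way to build a vector commitment is a Merkle tree, so the rollup described above can be sketched that way. This is an assumption for illustration, not a description of Walrus's exact construction: the hash function (SHA-256), the domain-separation bytes, the duplicate-last-node padding, and the helper names (`build_levels`, `prove`, `verify`) are all hypothetical choices.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(slivers: list[bytes]) -> list[list[bytes]]:
    """Hash each sliver into a leaf, then pair nodes upward to a single root.
    Odd-sized levels are padded by repeating their last node (sketch only)."""
    if not slivers:
        raise ValueError("need at least one sliver")
    level = [_h(b"\x00" + s) for s in slivers]  # 0x00 marks a leaf
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
            levels[-1] = level  # store the padded level so proofs can index it
        level = [_h(b"\x01" + level[i] + level[i + 1])  # 0x01 marks an inner node
                 for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[bytes]:
    """Collect the sibling hash at every level below the root."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])
        index //= 2
    return proof


def verify(root: bytes, sliver: bytes, index: int, proof: list[bytes]) -> bool:
    """Recompute the path from one sliver to the root using only the proof."""
    node = _h(b"\x00" + sliver)
    for sibling in proof:
        if index % 2 == 0:
            node = _h(b"\x01" + node + sibling)
        else:
            node = _h(b"\x01" + sibling + node)
        index //= 2
    return node == root
```

Here `levels[-1][0]` plays the role of the top-level blob commitment. A verifier holding only that root, one sliver, and a logarithmic-size proof can check the sliver's position without seeing any other sliver.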

This encoding pairs with Proof of Availability, or PoA, which turns initial custody into an onchain certificate on Sui. During the write process, the client encodes the blob, distributes slivers to the committee, and collects quorum signatures after nodes verify commitments locally. These signatures form a write certificate submitted as a Sui transaction, marking the official start of storage obligations. From there, the blob becomes a programmable object on Sui, with metadata tracking ownership, duration, and extensions.

The broader architecture reinforces these mechanisms. Storage happens in fixed epochs—about two weeks on mainnet—during which shard assignments to nodes remain stable. Committees form via delegated proof-of-stake using $WAL, where higher stake increases selection likelihood and reward share. The system assumes more than two-thirds of shards are held by honest nodes, tolerating up to one-third Byzantine faults both within and across epochs. Users interact directly or through optional untrusted components like aggregators that reconstruct and serve data via HTTP, or caches that act as content delivery helpers. Publishers can simplify uploads by handling encoding and certification on the user’s behalf, though users retain the ability to audit everything onchain.
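The relationship between stake, shards, and the fault assumption can be illustrated with a small sketch. It assumes shards are handed out in proportion to delegated stake using a largest-remainder rule, which is an illustrative choice and not necessarily the allocation Walrus uses; the function names and the example shard count are also hypothetical.

```python
def assign_shards(stakes: dict[str, int], total_shards: int) -> dict[str, int]:
    """Split total_shards across nodes in proportion to stake
    (largest-remainder rounding, assumed here for illustration)."""
    total_stake = sum(stakes.values())
    if total_stake <= 0:
        raise ValueError("total stake must be positive")
    shards = {n: s * total_shards // total_stake for n, s in stakes.items()}
    leftover = total_shards - sum(shards.values())
    # Give the remaining shards to the nodes with the largest fractional parts;
    # ties are broken by node name so the result is deterministic.
    order = sorted(stakes, key=lambda n: (-(stakes[n] * total_shards % total_stake), n))
    for n in order[:leftover]:
        shards[n] += 1
    return shards


def within_fault_assumption(shards: dict[str, int], faulty: set[str]) -> bool:
    """True when honest nodes hold more than two-thirds of all shards,
    i.e. faulty nodes hold strictly fewer than one-third."""
    total = sum(shards.values())
    bad = sum(shards[n] for n in faulty)
    return 3 * bad < total
```

For example, with stakes of 50, 30, 15, and 5 and ten shards, the largest staker ends up with half the shards, so that single node failing would already break the two-thirds assumption. This is one reason stake distribution matters as much as total stake.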

What really makes this design thoughtful is how it separates concerns: Walrus manages the data plane for encoding, storage, and retrieval, while Sui acts as the control plane for economics, proofs, and metadata. This division improves efficiency without sacrificing verifiability. In high-churn networks, the granular recovery and low-overhead repairs help maintain performance that feels closer to centralized clouds than typical decentralized alternatives.

From my perspective as someone analyzing blockchain architectures, Walrus’s approach demonstrates a mature focus on practical trade-offs. The 2D coding and PoA together provide verifiable availability without forcing excessive replication or bandwidth waste, which is crucial as data volumes grow. It avoids overpromising universality, instead delivering targeted reliability for large unstructured blobs. Of course, success will depend on stake staying distributed so that an honest supermajority (more than two-thirds of shards) holds over time, and on slashing being enforced effectively. Still, the elegance in balancing efficiency, fault tolerance, and programmability positions it as a solid foundation for data-intensive applications.