When people talk about AI infrastructure in crypto, the conversation often starts from capability. More throughput. Faster execution. More flexibility. The assumption is that if a system can do more things, it is automatically better suited for AI.

Vanar approaches the problem from a different direction. Instead of asking what the system can do, it asks what the system must not break when AI systems depend on it.

This distinction matters more than it sounds.

AI does not fail because it lacks power

It fails because environments behave inconsistently

Most AI systems are not limited by raw computation. They fail when the environment they operate in becomes unpredictable. Small changes in execution cost, settlement timing, or state finality can cascade into incorrect decisions, retries, or halted workflows.

Traditional blockchain infrastructure tolerates this inconsistency because humans sit in the loop. Users wait. Traders adjust. Developers patch around edge cases.

AI systems are far worse at waiting, adjusting, and patching around surprises.

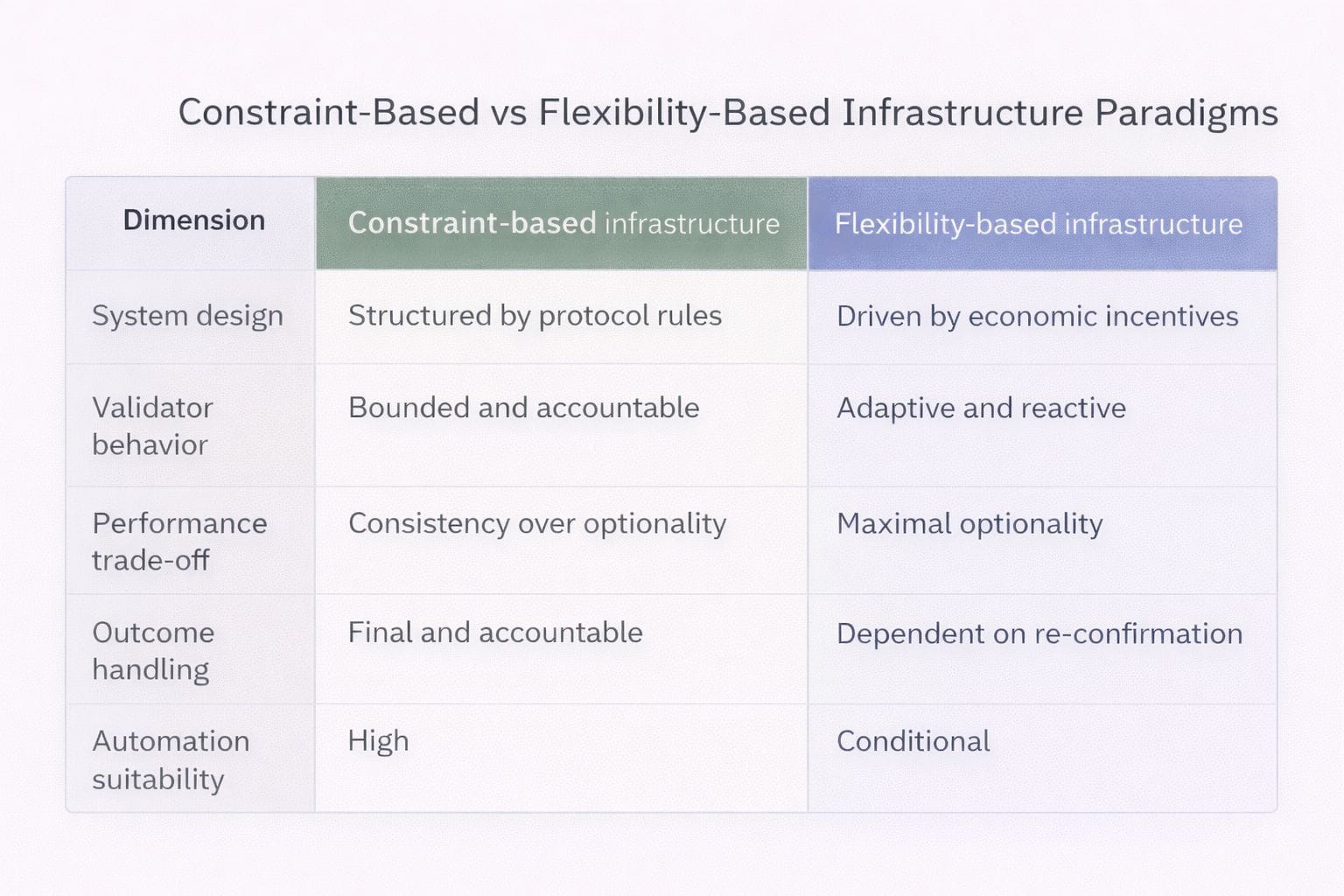

Vanar’s infrastructure appears to be designed with this constraint in mind. The network is structured to reduce variability rather than maximize optionality. That trade-off is intentional.

Predictability is treated as a first-class property

In many chains, predictability is a side effect of low usage. When traffic is light, fees are stable and execution feels reliable. Once load increases, predictability disappears and systems are expected to adapt.

Vanar does not treat predictability as a temporary condition. It treats it as a design requirement.

Fee behavior is meant to remain stable under load. Settlement outcomes are meant to be dependable once recorded. Validator behavior is constrained at the protocol level rather than left to reactive incentives.

This matters for AI because automation assumes continuity. An agent executing a sequence of actions cannot pause to reassess infrastructure conditions every time the network state changes. It needs guarantees that remain true across time, not just at specific moments.
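To make the continuity point concrete, here is a minimal sketch of an agent running a fixed sequence of actions against a fee budget. It is a toy model, not Vanar's fee mechanism: `run_plan`, `PlanResult`, and the fee values are hypothetical and chosen only to show how a single fee spike strands a multi-step plan midway.

```python
from dataclasses import dataclass


@dataclass
class PlanResult:
    completed_steps: int  # steps that were paid for and executed
    spent: float          # total fees paid so far
    aborted: bool         # True if the plan stopped before the last step


def run_plan(fees_per_step, budget):
    """Run steps in order; abort when the next fee would exceed the budget.

    fees_per_step is a hypothetical list of the fee charged at each step.
    """
    spent = 0.0
    for index, fee in enumerate(fees_per_step):
        if spent + fee > budget:
            # The agent is stuck mid-sequence with partial state on chain.
            return PlanResult(index, spent, True)
        spent += fee
    return PlanResult(len(fees_per_step), spent, False)


# Same plan, same budget; only the fee behavior differs.
stable = run_plan([1.0, 1.0, 1.0, 1.0], budget=5.0)
spiky = run_plan([1.0, 1.0, 4.0, 1.0], budget=5.0)

print(stable)  # completes all four steps
print(spiky)   # aborts before the third step
```

With stable fees the agent can budget once, up front. With variable fees it has to re-check before every step and needs a recovery path for a half-finished sequence.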

Settlement is the core of AI infrastructure, not execution

A common misconception is that AI needs faster execution. In practice, AI needs trustworthy settlement.

Execution can happen off chain, in parallel, or asynchronously. What cannot be outsourced is the moment where outcomes are finalized and responsibility is assigned.

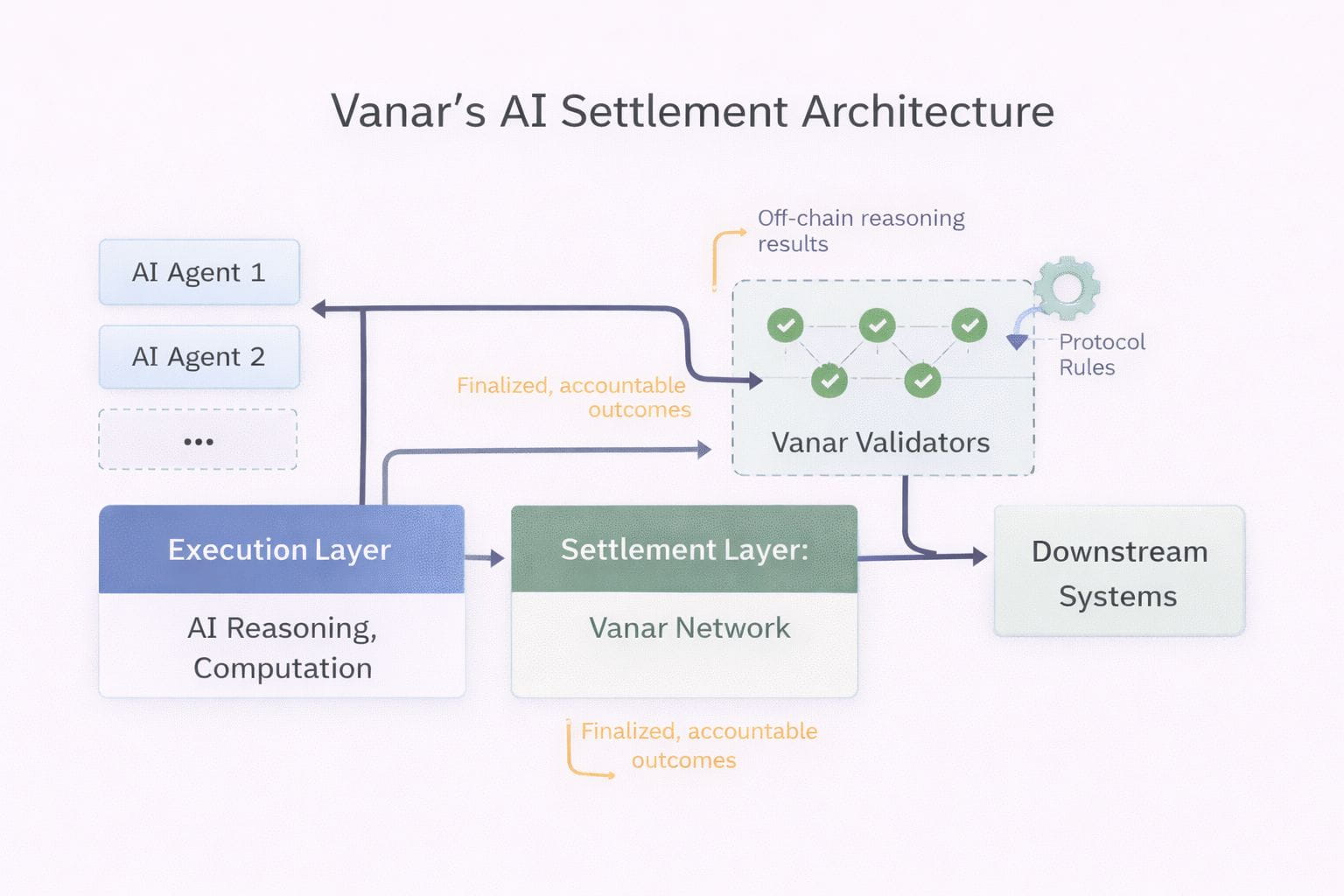

Vanar’s architecture centers on this moment. The infrastructure is optimized for clear state transitions, deterministic finality, and accountable behavior. This reduces the amount of defensive logic that AI systems need to implement around the chain.

From a systems perspective, this simplifies the entire stack above it.
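A rough sketch of what "defensive logic" means here, under stated assumptions. The `Receipt` type, the `get_receipt` and `get_head_height` callables, and the `final` flag are all hypothetical stand-ins for a chain client; none of them are taken from Vanar's actual API. The first function shows the polling an agent typically needs when finality is probabilistic. The second shows how little is left when the chain reports finality explicitly.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Receipt:
    tx_id: str
    block_height: int
    final: bool  # assumed flag: True once the chain treats the block as irreversible


def settle_with_confirmations(
    get_receipt: Callable[[str], Optional[Receipt]],
    get_head_height: Callable[[], int],
    tx_id: str,
    confirmations: int = 6,
    max_polls: int = 50,
) -> Receipt:
    """Defensive path: poll until the transaction is buried deep enough.

    A real client would sleep between polls; omitted to keep the sketch short.
    """
    for _ in range(max_polls):
        receipt = get_receipt(tx_id)
        if receipt is None:
            # Not yet included, or dropped by a reorganization: keep polling.
            continue
        depth = get_head_height() - receipt.block_height
        if depth >= confirmations:
            return receipt
    raise TimeoutError(f"{tx_id} not settled after {max_polls} polls")


def settle_with_finality(
    get_receipt: Callable[[str], Optional[Receipt]], tx_id: str
) -> Receipt:
    """Simpler path: a single lookup that trusts an explicit finality flag."""
    receipt = get_receipt(tx_id)
    if receipt is None or not receipt.final:
        raise RuntimeError(f"{tx_id} is not final")
    return receipt
```

The first function has three tunable parameters and a timeout path that the caller must handle. The second has none. That difference is the "stack above" getting simpler.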

Constraining behavior is more important than enabling everything

One of the more subtle choices in Vanar’s design is the emphasis on constrained behavior. Validators are not just incentivized to behave well; they are structurally limited in how they can react under stress.

This gives up some short-term optimization in exchange for long-term consistency.

For human-driven activity, this might feel restrictive. For AI-driven systems, it is exactly what is needed. Consistency compounds. Variability does not.

Why this matters beyond theory

The quality of AI infrastructure only becomes visible under stress. Demo environments rarely expose the real problems. Continuous operation does.

Vanar’s design choices suggest that it is being built for systems that do not stop, do not wait, and do not renegotiate assumptions every time network conditions change. That is a different target than most chains aim for.

Whether this approach succeeds will depend on adoption and real usage. But from an infrastructure standpoint, the direction is clear. Vanar is not optimizing for narratives or surface-level performance metrics. It is optimizing for environments where AI systems must rely on the chain behaving the same way tomorrow as it does today.

That is a harder problem to solve, and a more interesting one.