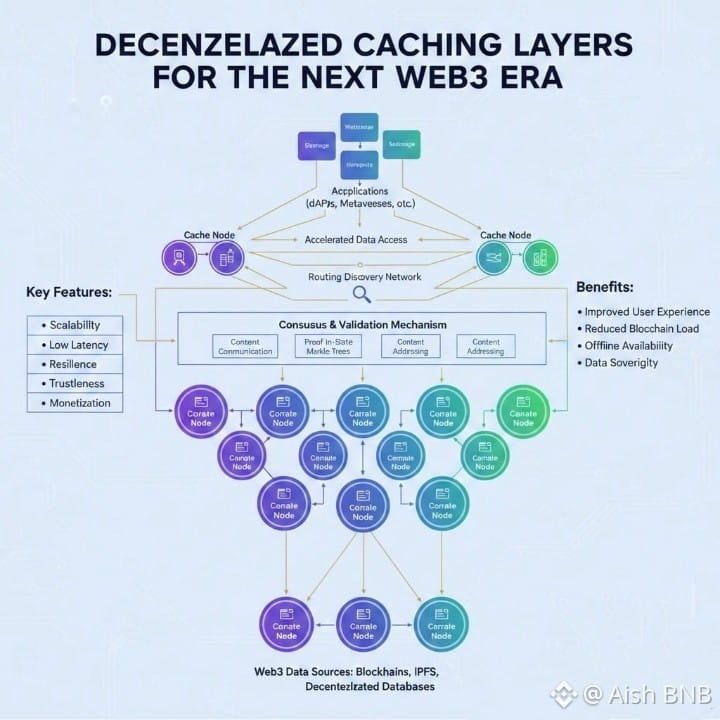

Everyone hypes decentralized storage as "your files live forever, no one can delete them." Cool, but here's the real talk: if reading that file takes 10 seconds because you're pulling pieces from 30 nodes across the globe, verifying each sliver, reconstructing, and hoping nothing got corrupted, users are gone. Web2 loads in a blink because of caching, edge servers, and CDNs, while Web3 dApps stay clunky and slow. Walrus doesn't pretend that's not a problem. They're building a whole decentralized caching system on top so apps can feel fast without turning into another centralized middleman.

The way it clicks:

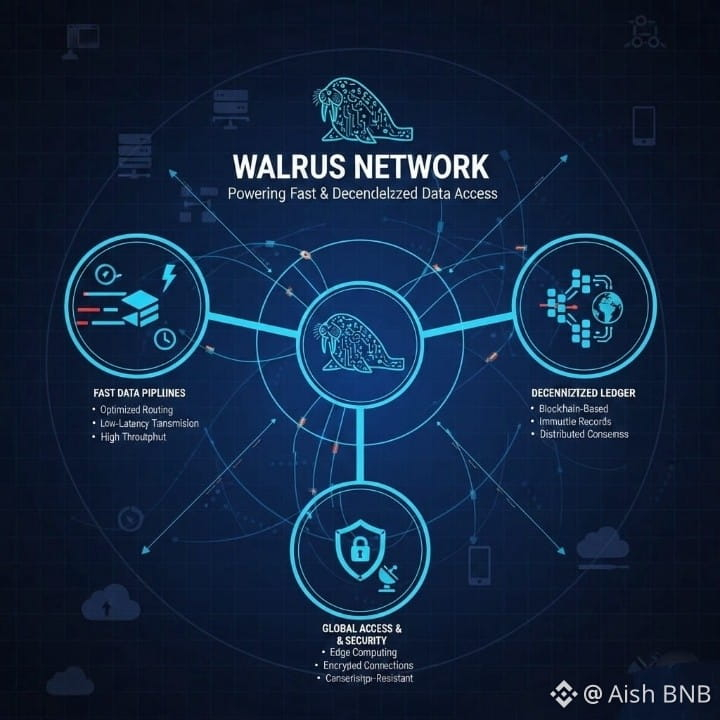

- Base storage: blobs get shredded into slivers with Red Stuff (Walrus's erasure coding), spread across storage nodes, committees assigned, certified on-chain. Standard Walrus reliability stuff.

- Then the killer layer: permissionless caches and aggregators anyone can spin up.

- Caches keep hot/popular data close to users (low-latency reads, regional edge style).

- Aggregators grab slivers, rebuild the blob, serve it over normal HTTP endpoints.

- Verifiability stays ironclad: the client can always check "this cached copy matches the on-chain cert, no tampering, no funny business."

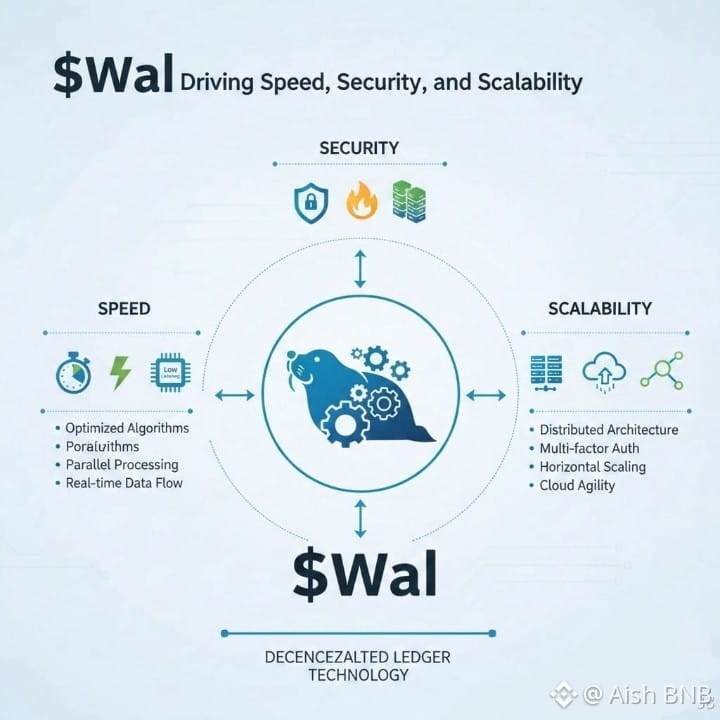

- No single company owns the cache network. Operators run their own nodes, get rewarded via $WAL incentives, but the system slashes them if they serve bad data or go offline.
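To make the "check the cached copy against the on-chain cert" idea concrete, here's a minimal sketch. Big assumption, stated loudly: it uses a plain SHA-256 digest as a stand-in for the certified blob commitment. The real Walrus blob ID is derived differently (from the encoded slivers and metadata), and `blob_digest` and `verify_cached_blob` are hypothetical helper names, not Walrus APIs. The point is only the shape of the check: the client recomputes a commitment from the bytes it received and compares it to the one it trusts.

```python
import hashlib
import hmac


def blob_digest(data: bytes) -> str:
    """Stand-in commitment: SHA-256 hex digest of the raw bytes.

    ASSUMPTION: the real Walrus blob ID is NOT a plain SHA-256 of the blob;
    this is only a placeholder for "a commitment the chain certified".
    """
    return hashlib.sha256(data).hexdigest()


def verify_cached_blob(data: bytes, certified_digest: str) -> bool:
    """Return True only if the served bytes match the trusted commitment."""
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(blob_digest(data), certified_digest)


if __name__ == "__main__":
    original = b"pixel data for some NFT image"
    certified = blob_digest(original)  # pretend this value came from the chain

    print(verify_cached_blob(original, certified))           # honest cache
    print(verify_cached_blob(original + b"!", certified))    # tampered copy
```

Because the check only needs the bytes and the trusted commitment, it doesn't matter which cache served the data. That's what lets the cache layer stay permissionless.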

This unlocks stuff that actually makes Web3 usable:

- NFT galleries load images in <1s instead of "fetching from nodes... still loading."

- Games pull skins, maps, assets fast—no lag spikes killing the experience.

- Media apps stream without constant buffering.

- Data markets answer queries quickly while the original blob stays verifiable.

- AI agents grab datasets without waiting ages or risking stale/corrupted caches.

The smart part: Walrus didn't tack caching on later. They designed the roles from day one: publishers (pro uploaders), aggregators (rebuilders), and caches (edge servers), all permissionless, all incentivized. Operators can specialize: one runs low-latency caches for SEA users, another does high-throughput aggregation for media apps, someone else focuses on regional compliance or speed. Businesses can form around these roles. Uptime isn't "community hope." It's someone's paid job. That's how you get Web2 speed with Web3 trust.
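Here's roughly how the cache and aggregator roles could fit together, as a toy read-through cache. Everything in it is an assumption for illustration: `ReadThroughCache` is a made-up class, the aggregator is just a callable you pass in, and a SHA-256 digest again stands in for the real blob ID. It's a sketch of the pattern (serve hot data locally, fall back to an aggregator on a miss, verify before storing), not how any actual Walrus cache is implemented.

```python
import hashlib
from typing import Callable, Dict


class ReadThroughCache:
    """Toy edge cache: serve hot blobs locally, fetch and verify on a miss."""

    def __init__(self, fetch_from_aggregator: Callable[[str], bytes]) -> None:
        # Hypothetical aggregator hook: takes a digest, returns rebuilt bytes.
        self._fetch = fetch_from_aggregator
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, digest: str) -> bytes:
        if digest in self._store:
            self.hits += 1
            return self._store[digest]

        self.misses += 1
        data = self._fetch(digest)
        # Verify before caching so a bad upstream can't poison the cache.
        # ASSUMPTION: SHA-256 stands in for the real blob commitment.
        if hashlib.sha256(data).hexdigest() != digest:
            raise ValueError("fetched data does not match requested digest")
        self._store[digest] = data
        return data


if __name__ == "__main__":
    blob = b"game map asset"
    digest = hashlib.sha256(blob).hexdigest()

    # Fake aggregator backed by a dict, standing in for sliver reconstruction.
    backing = {digest: blob}
    cache = ReadThroughCache(lambda d: backing[d])

    cache.get(digest)  # miss: goes to the aggregator
    cache.get(digest)  # hit: served from the local store
    print(cache.hits, cache.misses)
```

A real operator would add eviction and expiry, but the verify-before-store step is the part that keeps the cache untrusted: clients can run the same check on whatever it serves.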

In Karachi in 2026, where mobile data is expensive, internet drops randomly, and people want dApps (games, social feeds, NFT browsing, markets) that load fast without feeling like punishment, Walrus's caching layer could be the thing that finally makes decentralized apps compete on experience. Fast reads, no single point of failure, censorship resistance (a distributed cache is much harder to block), proofs you can verify if you want.

$WAL fuels the whole thing: pays for base storage + caching/aggregation ops, rewards operators who keep things quick and honest, burns fees as real usage ramps. It's not just transaction gas—it's the economic glue for a service layer economy around data.

Most storage projects stop at "decentralized = good." Walrus goes "decentralized + fast + verifiable + someone gets paid to keep it fast." That's the underrated shift. The caching/aggregator layer turns Walrus from "nice storage" into "infrastructure you can actually build real products on." If you're sick of dApps that feel like 2010 websites because "decentralized means slow," Walrus might be the quiet thing that fixes that. This is how Web3 stops sucking on speed—decentralized caching with receipts.