The transition toward AI-first infrastructure is not simply a technological upgrade; it is a structural shift in how decisions are made, actions are executed, and responsibility is distributed. As automation moves beyond assistance and into autonomy, the question is no longer whether systems can act independently, but whether they should—and under what constraints. This is where Vanar enters the discussion, not as another execution layer, but as an experiment in embedding intelligence directly into the fabric of decentralized systems. That experiment exposes a core tension: the more capable autonomous agents become, the more essential privacy, transparency, and human oversight are to their legitimacy.

AI-native systems differ fundamentally from earlier automation paradigms. Traditional blockchains process discrete, well-defined inputs: transactions, balances, signatures. AI-native infrastructure, by contrast, operates on context. It ingests documents, intent, historical behavior, and probabilistic signals, then transforms them into decisions that can alter financial states, governance outcomes, or digital environments. Privacy in this setting is no longer about hiding balances or anonymizing addresses; it is about controlling how meaning itself is accessed, interpreted, and acted upon.

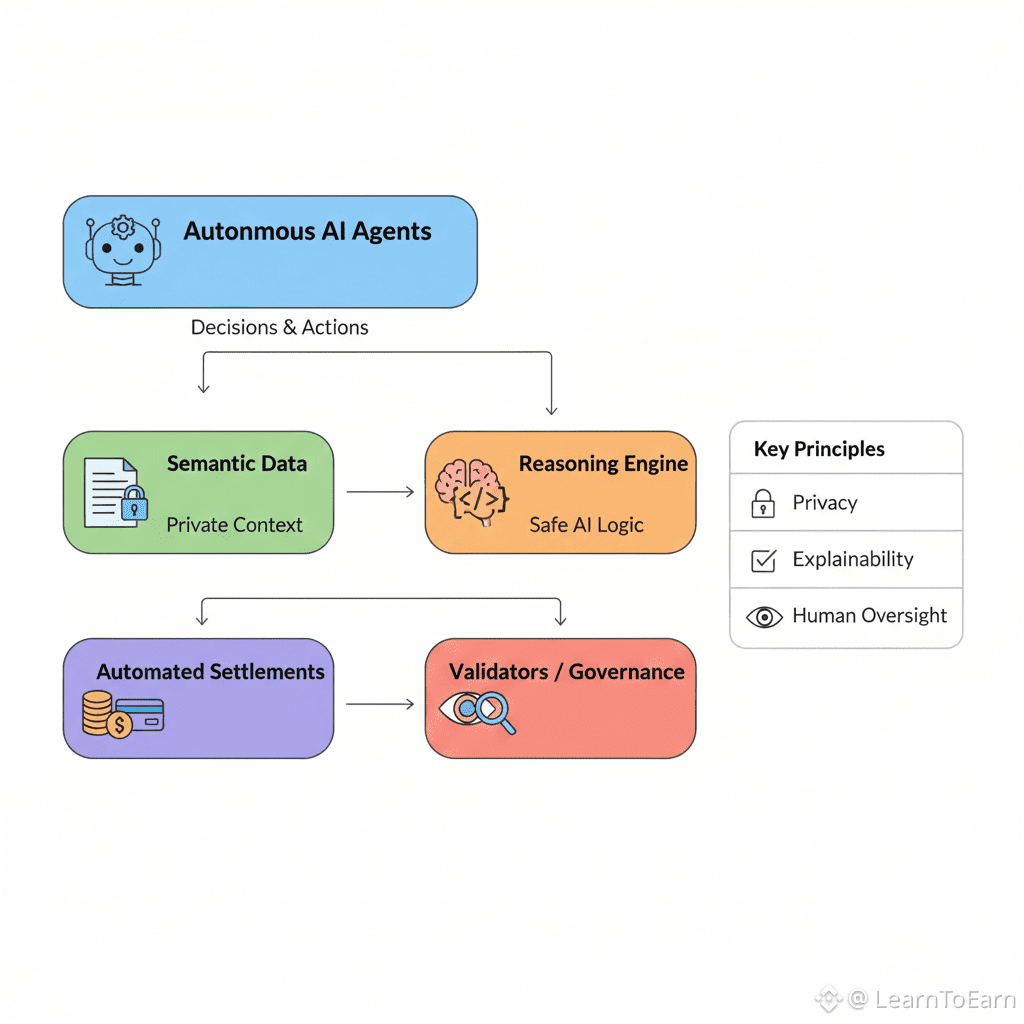

At the core of this shift is semantic data. When information is compressed into machine-readable representations that preserve intent and relationships, the surface area for risk expands dramatically. A system that understands a legal agreement or a financial obligation is also capable of misinterpreting it, over-generalizing it, or applying it outside its original context. Privacy, in this sense, is inseparable from epistemic restraint: limiting not just who can see data, but how far an automated agent is allowed to reason with it.

Autonomous action compounds this risk. Once reasoning systems are connected to execution layers, analysis is no longer theoretical. Decisions translate directly into payments, asset transfers, rule enforcement, or economic rebalancing. In such environments, failure modes are not abstract. A flawed inference can trigger cascading consequences across interconnected systems. Privacy breaches are no longer confined to data exposure; they become vectors for economic manipulation, coercion, or systemic bias.

This is where the idea of “hands-off” automation begins to break down. While AI excels at speed and pattern recognition, it lacks the situational awareness that comes from lived human experience. It cannot intuit social impact, reputational harm, or ethical nuance beyond what has been explicitly encoded. Treating autonomy as a substitute for accountability creates a dangerous illusion: that compliance can be automated without judgment, and governance can be reduced to code execution. In reality, removing humans from oversight does not eliminate risk—it obscures it.

The architectural choices within the Vanar ecosystem highlight this tension clearly. A unified semantic memory layer concentrates intellectual context in a way that is powerful but inherently sensitive. Reasoning engines that translate natural language into actionable logic introduce interpretive ambiguity. Automation frameworks that span gaming economies, virtual worlds, and payment flows blur the boundary between digital interaction and real economic consequence. In each case, privacy is not an optional feature but a structural requirement, because the cost of error scales with the system’s intelligence.

The integration of economic settlement completes the loop. Once automated agents can initiate and settle value transfers autonomously, opacity becomes unacceptable. At that point, the system is no longer just computing outcomes; it is participating in an economy. Economies require trust, and trust requires the ability to explain why something happened, not just that it happened. Speed and throughput matter far less than legibility and accountability.

This is where human oversight reasserts its importance—not as a bottleneck, but as a stabilizing force. Oversight does not mean micromanaging every automated action; it means designing systems that can surface reasoning paths, flag anomalies, and invite intervention when decisions exceed predefined risk thresholds. Validators, governance participants, and auditors serve as a social layer that contextualizes machine behavior within broader ethical and legal frameworks. Without this layer, autonomy drifts toward unaccountable power.
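To make the idea of threshold-based intervention concrete, here is a minimal sketch in Python. It is not drawn from any Vanar API: the class names, fields, and threshold values are all hypothetical, chosen only to show how an agent's proposed action could be routed either to autonomous execution or to a human reviewer, with its reasoning path attached.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedAction:
    """An action an agent wants to take (all fields are illustrative)."""
    description: str
    value_at_risk: float       # e.g. the amount to be transferred
    model_confidence: float    # agent's self-reported confidence, 0.0 to 1.0
    reasoning: list[str] = field(default_factory=list)


@dataclass
class OversightPolicy:
    """Hypothetical thresholds that governance participants might set."""
    max_autonomous_value: float = 1_000.0
    min_confidence: float = 0.9


def review(action: ProposedAction, policy: OversightPolicy) -> dict:
    """Decide whether an action runs autonomously or is escalated to a human."""
    flags = []
    if action.value_at_risk > policy.max_autonomous_value:
        flags.append("value_exceeds_threshold")
    if action.model_confidence < policy.min_confidence:
        flags.append("low_confidence")
    if not action.reasoning:
        flags.append("missing_reasoning_path")

    return {
        "decision": "escalate" if flags else "execute",
        "flags": flags,
        "reasoning": action.reasoning,  # surfaced so a reviewer can inspect it
    }
```

A small refund with a clear justification would pass straight through, while a large rebalancing would come back as `"escalate"` with the flag that triggered it. The point of the design is that humans see only the exceptions, not every action.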

A sustainable path forward rests on a few core principles. First, automated agency must be explainable by design. Every significant action should leave behind a traceable record of the inputs, assumptions, and reasoning that produced it, in a form humans can inspect. Second, governance must be multi-stakeholder and continuous, not reactive. Oversight mechanisms should evolve alongside the intelligence they supervise, rather than lagging behind it. Third, data sovereignty must remain with users and institutions, ensuring that context is shared deliberately, not extracted opportunistically.
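One way to picture the first principle is a tamper-evident decision log. The sketch below is an assumption about how such a record could look, not a description of an existing implementation: `DecisionLog` and its field names are invented for illustration. Each entry stores the inputs, assumptions, and reasoning behind an action, and commits to the previous entry's hash so that later edits become detectable.

```python
import hashlib
import json


def _digest(payload: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the payload."""
    encoded = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class DecisionLog:
    """Append-only log; each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, action: str, inputs: dict, assumptions: list, reasoning: list) -> dict:
        body = {
            "action": action,
            "inputs": inputs,
            "assumptions": assumptions,
            "reasoning": reasoning,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

If anyone alters a recorded input after the fact, `verify()` returns `False`. The log does not make the reasoning correct; it only makes it inspectable and hard to rewrite quietly.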

The broader implication is clear: intelligence without integrity is not progress. As AI systems gain autonomy, the defining challenge is no longer technical feasibility but moral and institutional alignment. Privacy, transparency, and human judgment are not obstacles to automation; they are the conditions that make it socially viable. In an economy increasingly shaped by autonomous agents, trust becomes the scarce resource. The systems that endure will be those that treat trust as infrastructure, not as an afterthought.

Why Public Blockchains Fail AI Privacy by Default and What a New Architecture Makes Possible

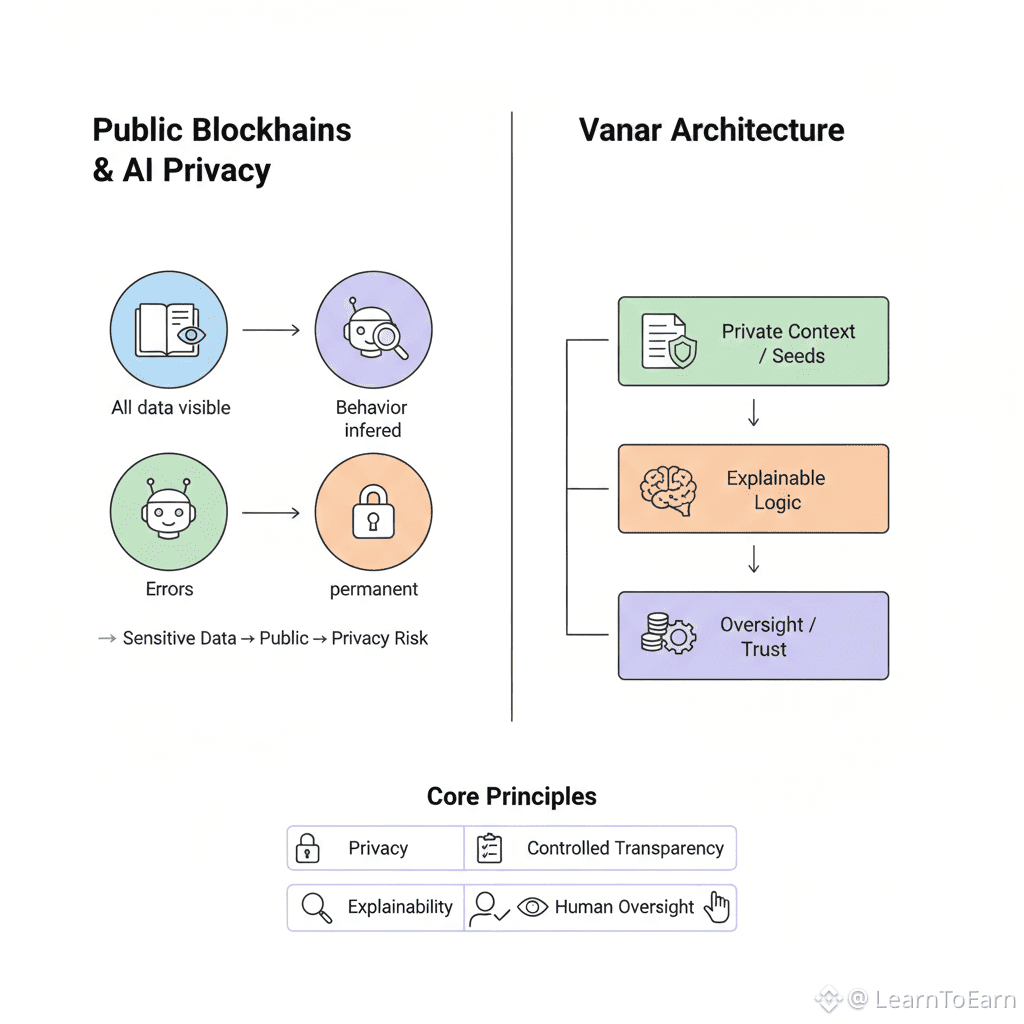

The defining feature of public blockchains has always been radical transparency. Every transaction is observable, every state change verifiable, and every interaction preserved indefinitely. This model worked when blockchains were primarily financial ledgers. It breaks down the moment artificial intelligence becomes a first-class participant in the system.

AI does not merely move value; it reasons, learns, and acts on sensitive context. It consumes private documents, behavioral patterns, internal logic, and probabilistic inferences. When such systems are deployed on infrastructure designed for universal visibility, privacy failure is not a bug — it is the default outcome. This is not a tooling problem or a missing feature. It is a foundational mismatch between public ledgers and intelligent automation.

The Privacy Contradiction at the Core of Public Chains

Public blockchains assume that transparency creates trust. AI systems assume that confidentiality enables intelligence. These assumptions collide the moment an autonomous agent operates in the open.

An AI agent interacting with smart contracts leaves an observable trail: queries, parameter choices, timing patterns, and execution logic. Even without accessing raw data, external observers can infer intent. Over time, this creates a full behavioral profile of the agent itself. For enterprises, this is untenable. Strategy becomes legible. Competitive advantage evaporates. Confidential operations turn into public signals.

This exposure is not limited to businesses. Any AI system handling personal data — financial, medical, legal, or behavioral — risks leaking sensitive information through its on-chain footprint. Even encrypted inputs cannot fully mask inference patterns. In a transparent execution environment, privacy erodes through correlation.

Verifiability vs. Confidential Intelligence

Public chains are built around the idea that anyone should be able to verify everything. AI systems, by contrast, often require restricted visibility to function responsibly.

An intelligent agent may rely on proprietary models, private datasets, or regulated information. Full disclosure of inputs is neither legally permissible nor operationally safe. Attempts to bridge this gap using cryptographic techniques introduce friction. Zero-knowledge systems excel at validating static statements, but AI reasoning is dynamic, iterative, and probabilistic. Forcing intelligence into rigid proof frameworks compromises usability, scalability, or both.

The result is a persistent trade-off: either intelligence remains shallow enough to be publicly verifiable, or it becomes powerful enough to require secrecy, at which point it can no longer be verified, and therefore trusted, on public infrastructure. Public chains cannot resolve this tension without abandoning their core premise.

Immutability as a Liability

Immutability secures history, but AI systems evolve. Early reasoning errors, biased decisions, or improperly handled data can be permanently recorded. In a regulatory environment shaped by data protection laws and evolving ethical standards, irreversible storage becomes a liability.

If an autonomous system processes personal or sensitive data incorrectly, there is no mechanism for correction, deletion, or contextual revision. This clashes directly with modern privacy frameworks and exposes operators to long-term legal and reputational risk. A system that learns over time cannot coexist with an infrastructure that freezes every mistake forever.

A Different Starting Point for Intelligent Systems

Vanar approaches the problem from the opposite direction. Instead of adapting AI to public ledgers, it adapts infrastructure to the requirements of intelligence.

The core assumption is simple: meaningful AI requires controlled context. Intelligence should operate in environments where meaning can be processed without exposure, decisions can be audited without full disclosure, and actions can be settled without revealing the entire reasoning path.

This leads to a layered model where memory, reasoning, and execution are separated by privacy boundaries. Sensitive data is processed locally and abstracted into semantic representations. These representations preserve meaning while discarding raw content. Reasoning operates on context rather than documents. Automation executes outcomes while exposing only what is necessary for trust.

Crucially, transparency is applied selectively. Economic settlement and final state transitions remain verifiable. The cognitive process that led there does not need to be public to be accountable. Trust is derived from auditability and governance, not voyeurism.
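A simple way to illustrate "accountable without being public" is a salted hash commitment. The Python sketch below is a generic technique, not Vanar's documented mechanism, and the function names are hypothetical. The agent publishes the outcome together with an opaque commitment to its reasoning; an authorized auditor who is later given the reasoning and the salt can confirm it matches what was committed at the time.

```python
import hashlib
import hmac
import json
import secrets


def commit(reasoning: dict) -> tuple[str, bytes]:
    """Return (public commitment, private salt) for a private reasoning trace."""
    salt = secrets.token_bytes(32)  # fixed length, so salt + data is unambiguous
    encoded = json.dumps(reasoning, sort_keys=True).encode("utf-8")
    commitment = hashlib.sha256(salt + encoded).hexdigest()
    return commitment, salt


def public_record(outcome: dict, commitment: str) -> dict:
    """What gets published: the outcome plus an opaque commitment."""
    return {"outcome": outcome, "reasoning_commitment": commitment}


def audit(record: dict, reasoning: dict, salt: bytes) -> bool:
    """An authorized auditor checks disclosed reasoning against the record."""
    encoded = json.dumps(reasoning, sort_keys=True).encode("utf-8")
    expected = hashlib.sha256(salt + encoded).hexdigest()
    return hmac.compare_digest(expected, record["reasoning_commitment"])
```

This is deliberately modest: a commitment proves the reasoning was not changed after the fact, but it does not prove the reasoning was sound. That judgment still belongs to the auditor and the governance process around them.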

Why This Architecture Matters

This shift unlocks entire categories of AI-driven activity that public chains structurally exclude.

Enterprises can deploy autonomous financial systems without broadcasting strategy. Games and virtual worlds can run adaptive economies without exposing player behavior to manipulation. Regulated industries can use intelligent agents without violating confidentiality laws. In each case, privacy is not an add-on — it is the precondition for participation.

More importantly, this architecture reframes decentralization itself. Decentralization does not require universal visibility; it requires distributed control, verifiable outcomes, and accountable governance. Privacy and decentralization are not opposites. They are complementary when intelligence enters the system.

From Public Ledgers to Private Intelligence Networks

The future of blockchain is not a single global spreadsheet where every thought is observable. It is a network of autonomous agents operating with constrained visibility, producing outcomes that can be trusted without exposing their internal cognition.

Public blockchains fail AI privacy by design because they equate openness with legitimacy. Intelligent systems demand a more nuanced definition of trust — one that accepts confidentiality, explainability, and human oversight as core primitives.

By starting from the needs of intelligence rather than the ideology of transparency, Vanar points toward that future. Not a louder chain, but a quieter one where privacy enables capability, and trust emerges from structure, not exposure.

Vanar Chain’s Vision for Confidential AI Workflows: Building the Trust Layer for an Intelligent Economy

The evolution of artificial intelligence is no longer defined by better predictions or faster analytics. The real inflection point arrives when AI systems begin to act—executing transactions, enforcing policies, reallocating resources, and shaping digital environments in real time. At that moment, a hard constraint becomes visible: intelligence cannot function responsibly without privacy. This is where most blockchain infrastructure fails, not due to poor implementation, but because its foundational assumptions are incompatible with confidential cognition.

Public ledgers were designed to make value movement observable and verifiable by anyone. Intelligent agents, however, operate on sensitive context: private documents, behavioral patterns, internal strategies, and probabilistic inferences. Broadcasting this context—or even the traces it leaves behind—undermines both trust and utility. The result is a structural deadlock: the more autonomous AI becomes, the less suitable transparent-by-default systems are for hosting it.

The Unmet Requirement: Privacy as Cognitive Infrastructure

AI systems do not merely store or transmit data; they interpret meaning. They draw conclusions from relationships, intent, and history. When such systems are forced to operate in environments where every interaction is exposed, privacy fails not at the level of raw data, but at the level of inference. Observers can reconstruct goals, strategies, and weaknesses without ever accessing explicit inputs.

For enterprises, this eliminates confidentiality. For individuals, it erodes agency. For regulated sectors, it creates immediate legal exposure. Most importantly, it prevents AI from moving into roles where discretion is essential—roles that humans occupy precisely because they can be trusted with sensitive information.

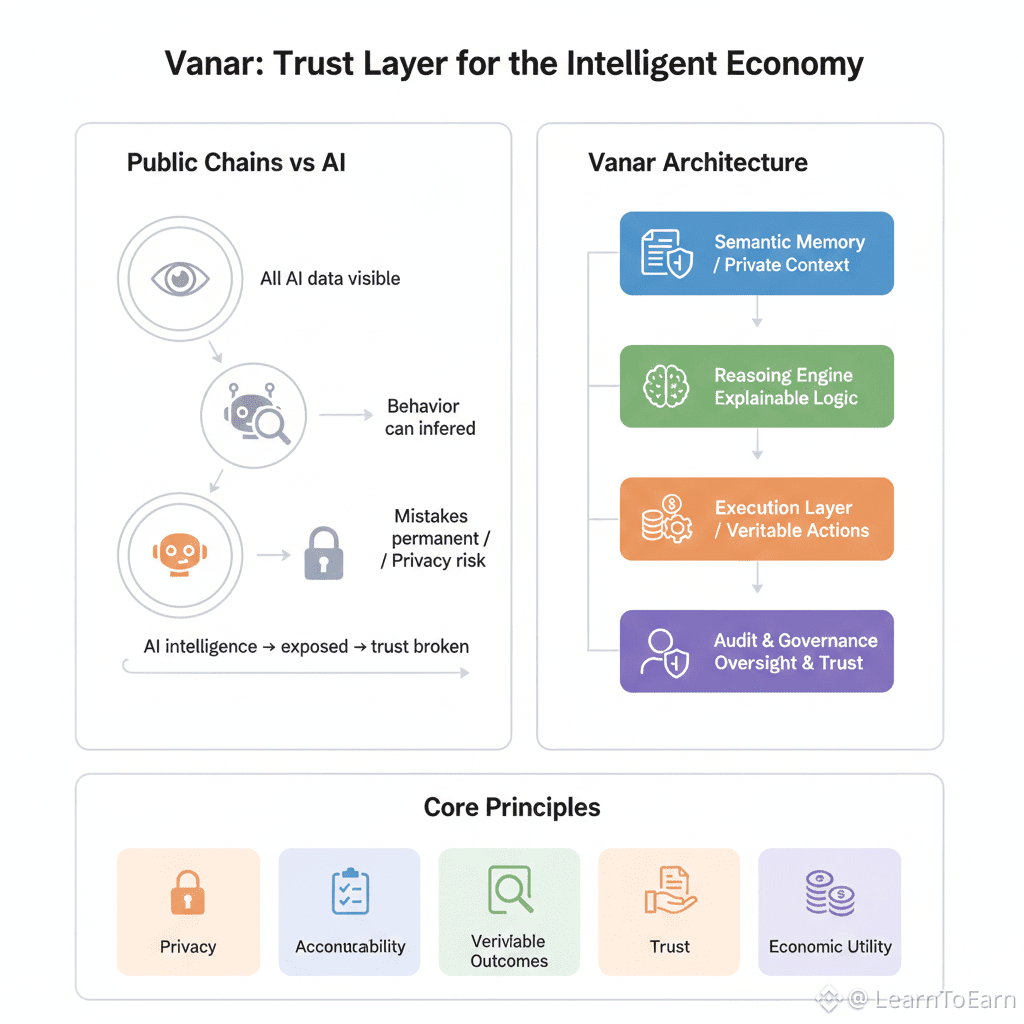

Vanar Chain is built around the recognition that intelligence requires selective opacity. Its vision reframes blockchain not as a universal glass box, but as a coordination layer where outcomes are verifiable even when cognition remains private. This distinction is subtle, but foundational.

Redefining Trust: From Visibility to Verifiability

The traditional blockchain model equates transparency with trust. In intelligent systems, this equation no longer holds. Trust must be derived from correctness, compliance, and accountability—not from exposing every intermediate step.

Confidential AI workflows require three properties simultaneously. First, sensitive inputs must remain protected throughout processing. Second, reasoning must be explainable in a way that supports oversight without revealing private context. Third, actions must be settled in a verifiable manner so that economic and governance outcomes remain auditable.

This shifts the role of infrastructure. Instead of enforcing universal disclosure, it must support controlled disclosure: revealing just enough to prove that rules were followed, without exposing the information those rules operated on.
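Controlled disclosure can be sketched with a salted Merkle tree, a standard construction rather than anything specific to Vanar. In the hypothetical Python example below, only the tree root is published. The holder can later reveal a single field, such as a compliance flag, along with a short proof, while the remaining fields stay hidden. All names and the field layout are illustrative assumptions.

```python
import hashlib
import json
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _leaf(key: str, value: str, salt: bytes) -> bytes:
    # 0x00 prefix separates leaves from internal nodes; the salt hides
    # low-entropy values that could otherwise be guessed by brute force.
    return _h(b"\x00" + salt + json.dumps([key, value]).encode("utf-8"))


def _levels(leaves: list[bytes]) -> list[list[bytes]]:
    """All tree levels, bottom-up; an odd node is paired with itself."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:
            cur = cur + [cur[-1]]
        levels.append([_h(b"\x01" + cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def build(fields: dict[str, str]):
    """Return (root, salts, levels). Only the root is meant to be public."""
    keys = sorted(fields)
    salts = {k: secrets.token_bytes(16) for k in keys}
    levels = _levels([_leaf(k, fields[k], salts[k]) for k in keys])
    return levels[-1][0], salts, levels


def prove(fields: dict[str, str], levels, key: str) -> list[tuple[bytes, bool]]:
    """Sibling path for one field: (sibling_hash, sibling_is_on_the_right)."""
    idx = sorted(fields).index(key)
    path = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = idx ^ 1
        path.append((level[sibling], sibling > idx))
        idx //= 2
    return path


def verify(root: bytes, key: str, value: str, salt: bytes, path) -> bool:
    """Rebuild the root from one revealed field and its sibling path."""
    node = _leaf(key, value, salt)
    for sibling, on_right in path:
        node = _h(b"\x01" + (node + sibling if on_right else sibling + node))
    return node == root
```

A verifier holding only the root can confirm that, say, `kyc_passed` was `"true"` in the committed record without learning the counterparty or the amount. A production design would need more care (for example, around how odd-sized levels are padded), so treat this as an illustration of the principle only.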

A Layered Model for Confidential Intelligence

The architectural response to this challenge is separation. Memory, reasoning, and execution must exist in distinct layers, each with its own privacy boundary.

Semantic memory operates on meaning rather than raw content. Sensitive documents are transformed locally into contextual representations that preserve relationships and intent while discarding the raw content itself. These representations can be reasoned over without reconstructing the original material, allowing intelligence to function without direct access to confidential sources.

Reasoning layers translate context into conclusions. Their responsibility is not only to produce answers, but to justify them. Explainability becomes more important than observability. The system must be able to show why a conclusion was reached, even if it cannot reveal every input that contributed to it.

Execution layers convert conclusions into action. At this point, privacy gives way to accountability. The fact that an action occurred—and that it met predefined policy constraints—must be publicly verifiable. What remains private is the deliberative path that led there.

This is the core insight behind Vanar’s design: intelligence happens in private, responsibility manifests in public.
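The three layers described above can be pictured as a small pipeline. The Python sketch below is purely illustrative: the invoice scenario, the type names, and the 30-day rule are assumptions made up for the example, and none of it reflects an actual Vanar interface. What it shows is the shape of the boundaries: the memory layer reduces a private document to derived facts, the reasoning layer produces a justified conclusion, and the execution layer emits a public receipt that says what happened and whether policy held, but not why.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Memory layer output: derived facts, not the source document."""
    amount_due: float
    days_overdue: int


@dataclass(frozen=True)
class Conclusion:
    """Reasoning layer output: a decision plus its justification."""
    action: str
    amount: float
    justification: str


def memory_layer(private_invoice: dict) -> Context:
    # Runs locally; names, line items, etc. are not carried forward.
    return Context(
        amount_due=float(private_invoice["total"]) - float(private_invoice["paid"]),
        days_overdue=int(private_invoice["days_overdue"]),
    )


def reasoning_layer(ctx: Context) -> Conclusion:
    if ctx.amount_due <= 0:
        return Conclusion("none", 0.0, "nothing outstanding")
    if ctx.days_overdue > 30:
        return Conclusion("collect", ctx.amount_due, "overdue by more than 30 days")
    return Conclusion("remind", 0.0, "outstanding but within grace period")


def execution_layer(c: Conclusion, max_amount: float) -> dict:
    """Public receipt: what happened and whether policy held, not why."""
    within_policy = c.amount <= max_amount
    return {
        "action": c.action if within_policy else "blocked",
        "amount": c.amount if within_policy else 0.0,
        "policy_ok": within_policy,
    }
```

Running an invoice through `execution_layer(reasoning_layer(memory_layer(invoice)), max_amount=1000.0)` yields a receipt with an action, an amount, and a policy flag. The customer's identity and the justification stay behind the earlier boundaries, available to an auditor but absent from the public record.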

Why This Enables Real Adoption

Most AI-on-chain discussions fail because they ignore institutional reality. Enterprises do not operate in public. Healthcare systems cannot expose patient context. Financial strategies lose value the moment they are observable. Gaming economies collapse when internal mechanics can be reverse-engineered in real time.

Confidential AI workflows unlock these domains by aligning infrastructure with how intelligence actually functions in the real world. Agents can analyze private balance sheets, behavioral signals, or contractual obligations without turning them into public artifacts. At the same time, the outcomes of that analysis—payments, adjustments, settlements—remain subject to collective verification.

This balance is what allows autonomy without chaos. It preserves decentralization while acknowledging that discretion is not the enemy of trust, but its prerequisite.

The Economic Implication of Private Intelligence

When intelligent activity becomes private but outcomes remain verifiable, value accrues differently. Tokens no longer derive relevance from speculative visibility, but from their role in settling meaningful economic decisions. In this model, $VANRY functions as a settlement instrument for actions that only exist because confidential intelligence made them possible.

This reframes utility at a structural level. The network does not monetize attention or transparency; it secures outcomes produced by trusted cognition. The more the system enables real-world decision-making—enterprise automation, regulated workflows, adaptive digital economies—the more essential its settlement layer becomes.

Toward an Intelligent, Trustworthy Internet

The future of decentralized systems will not be built on radical exposure, but on deliberate restraint. Intelligence scales only when it is protected. Autonomy is only accepted when it is accountable. Trust emerges not from watching every step, but from knowing that steps can be examined when it matters.

Vanar Chain’s vision positions blockchain as the quiet infrastructure beneath intelligent activity—a system that enables agents to work with the discretion of humans and the reliability of machines. In such an economy, confidentiality is not a feature to be toggled on. It is the condition that makes intelligence usable at all.

If AI is to become a genuine participant in economic and social systems, it will require more than computation. It will require trust. And trust, in the age of autonomous agents, begins with privacy by design.