When I look at @Vanarchain I try to strip away the labels first: AI chain, entertainment L1, green blockchain. Those are narrative wrappers. In live market conditions, the real question is simpler: does capital remain when attention dissipates? What stands out immediately is that activity doesn’t resemble the usual feature-driven Layer 1 cycle. There’s no obvious emissions-fueled churn. Usage is quieter, more consistent, and noticeably sticky. That typically indicates infrastructure doing work users don’t want to migrate away from.

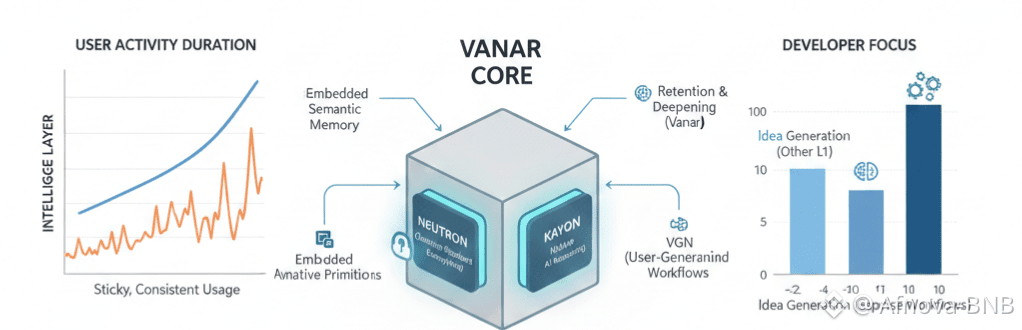

The next layer of analysis is where intelligence actually resides. Most chains treat AI as an externality: APIs, off-chain inference, oracle-style integrations. Vanar takes a different approach. Components like Neutron and Kayon aren’t optional extensions; they’re structural constraints. That matters. When intelligence is native, execution stops being the scarce resource and interpretation becomes the bottleneck. At that point, traditional metrics like TPS lose relevance. What I care about instead is whether workflows deepen over time rather than reset.

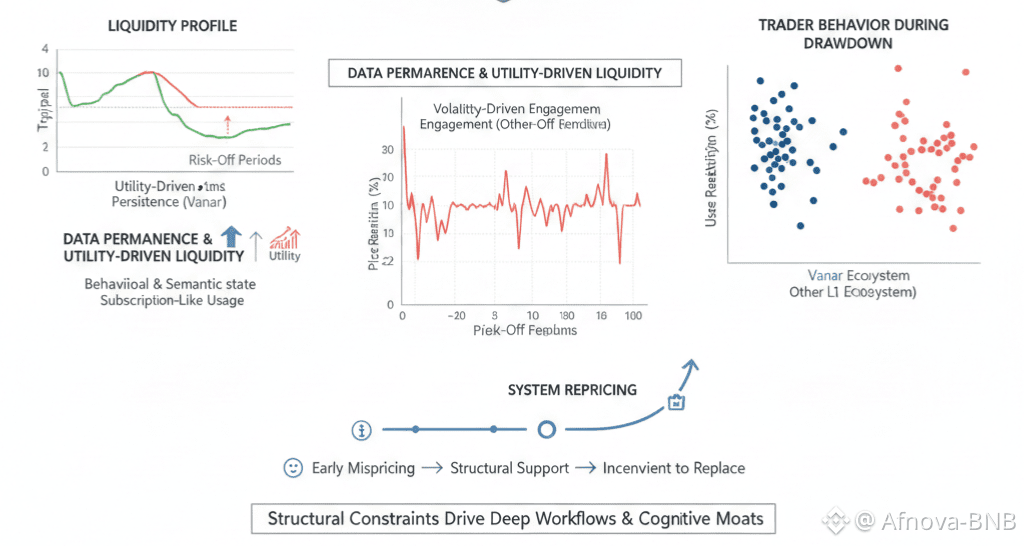

That’s usually where skepticism enters. Integrated intelligence is expensive, and expensive systems tend to externalize cost through inflation, subsidies, or complexity. What’s interesting here is that Vanar’s design shifts AI-related demand toward predictable, subscription-like usage instead of bursty transactional spikes. The liquidity profile changes as a result. Rather than volatility-driven engagement, you see utility-driven persistence. Markets tend to misprice that early because it doesn’t manifest as excitement; it shows up as lower abandonment.

Developer behavior reinforces this view. Hackathons are less about idea generation and more about retention. The Vanguard Program isn’t just onboarding builders; it’s conditioning them to think in AI-native primitives. Once someone builds with embedded semantic memory and reasoning, porting that logic to a generic L1 isn’t trivial. That creates a soft moat: not hard lock-in, but cognitive friction. Traders usually notice that kind of moat only after an ecosystem stops leaking users during drawdowns.

Security is where my assumptions shift again. Quantum resistance often reads like future-proofing theater. But upgrading Neutron’s encryption isn’t about imminent threats; it’s about data permanence. If a network positions itself as long-term storage for behavioral and semantic state, long-term confidentiality becomes foundational, because data encrypted today has to stay unreadable for as long as it is stored. At that point, Vanar looks less like a transaction network and more like a durable digital memory layer, something markets don’t have a clean valuation framework for yet.

Liquidity behavior aligns with this thesis. I don’t see reflexive leverage chasing momentum. Liquidity thins during risk-off periods, but it doesn’t vanish. That usually suggests holders aren’t anchored to short-term narratives; they’re anchored to system utility. It’s not loudly bullish, but it’s structurally supportive. Historically, that pattern tends to precede repricing rather than follow it.

The entertainment angle initially feels orthogonal until you remember that high-fidelity media stress-tests infrastructure before finance ever does. Real-time rendering, persistent identity, and user-generated economies create data complexity most DeFi never touches. The linkage between Vanar, Virtua, and VGN starts to look less like branding and more like systems validation. If intelligence holds under entertainment load, it’s far more likely to hold under enterprise workflows.

By the end of the analysis, the model shifts. Vanar isn’t competing for attention in the Layer 1 arena; it’s competing for irreversibility. Systems that can understand, store, and reason over data create switching costs that never show up on charts. In fragmented markets, that’s usually where quiet winners form: not by moving fast, but by becoming inconvenient to replace.