I've been watching blockchain infrastructure projects promise interoperability for years now. Most don't deliver. The ones that do usually compromise somewhere, either on actual technical integration or on economics that make sense when AI agents start transacting autonomously. When Vanar Chain announced their cross-chain expansion onto Base earlier this year, I didn't rush to conclusions because we've seen expansion announcements before that were just wrapped bridges with better marketing.

But something kept nagging at me about how Vanar Chain was actually deploying their AI stack across ecosystems. Not the narrative about it. The actual architecture patterns.

Right now the technical foundation matters more than price action. What's interesting is that Vanar Chain processed 11.9 million transactions across 1.56 million unique addresses, roughly 7.6 transactions per address on average, without demanding that activity happen exclusively on their own infrastructure. The distribution pattern suggests these aren't speculative users hoping for airdrops. Someone's building real applications that require AI capabilities most blockchain infrastructure just can't provide.

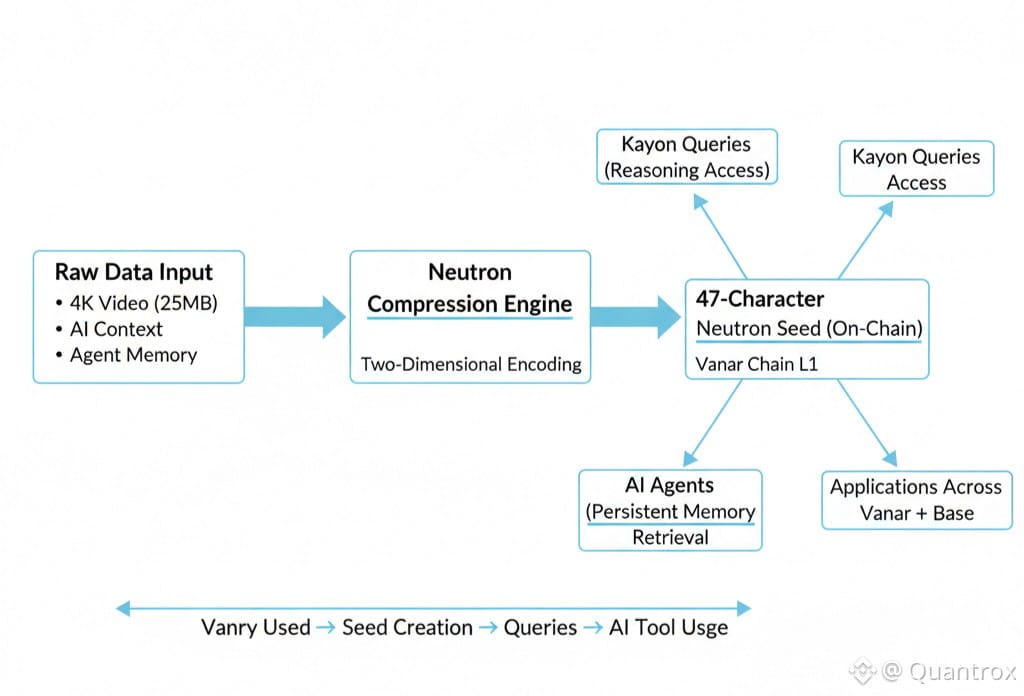

The protocol itself uses something called Neutron for compression. Two-dimensional encoding that brings storage overhead down dramatically, demonstrated live in Dubai last April when Vanar Chain compressed a 25-megabyte 4K video into a 47-character seed and stored it completely on-chain during a transaction. That efficiency matters because it's the only way AI context and memory work on blockchain without depending on centralized storage forever. Vanar Chain was built specifically for this, focusing on making intelligence native at every infrastructure layer rather than bolting AI features onto existing chains.

Developers building on Vanar Chain have to think differently about where data lives and how agents access it. That's their commitment showing. Vanry earns its utility when applications pay for AI infrastructure usage: every Neutron seed creation requires Vanry, every Kayon query consumes Vanry, and the AI tool subscriptions launching Q1 2026 are denominated in Vanry. Standard token utility structure, nothing revolutionary there. But here's what caught my attention.

The partnership spread isn't random. It's deliberate in ways that suggest people thought about production requirements seriously. You've got NVIDIA providing CUDA, Tensor, and Omniverse access. Google Cloud hosting validator nodes through BCW Group. VIVA Games Studios, with 700 million lifetime downloads, building Disney, Hasbro, and Sony projects on Vanar Chain. A roster of serious technical partners like that takes more credibility to maintain than announcing MOUs with no-name protocols. People are choosing Vanar Chain because they actually need infrastructure that works, not just partnership announcements.

Maybe I'm reading too much into partnership patterns. Could just be good business development. But when you're integrating production-grade AI tools from NVIDIA, every choice has technical implications. Enterprise partnerships mean dealing with different technical requirements, different compliance standards, different performance expectations. You don't get NVIDIA handing over development tools unless you're committed to the actual capability part, not just the AI narrative.

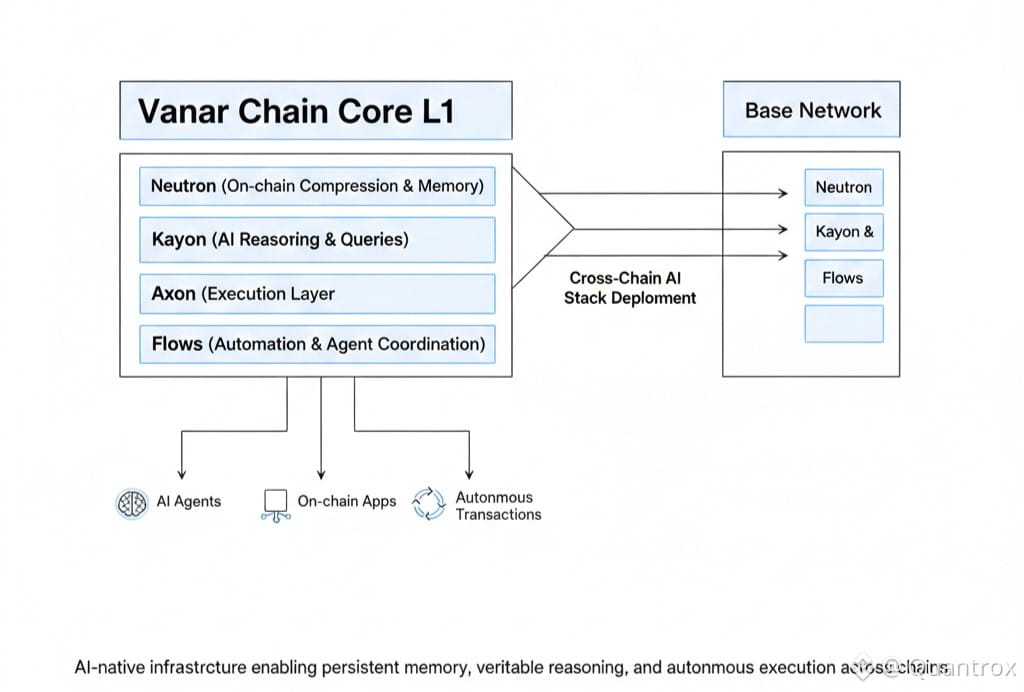

Vanar Chain deployed a complete five-layer AI stack before seeking validation. This was real infrastructure: developers could test whether the coordination mechanisms between Neutron compression, Kayon reasoning, Axon execution, and Flows automation, all sitting on top of the base chain, actually worked. That was shipping working products first, partnerships second. There wasn't huge fanfare, but it was enough to prove the system could handle actual AI workloads with applications that weren't just internal test cases.

Vanry token metrics don't tell you everything about infrastructure adoption. Trading happens for lots of reasons. What you'd want to know is how many AI agents are actually using these capabilities consistently, whether fee revenue from Neutron seeds and Kayon queries is growing, whether the economic model sustains itself without depending purely on speculation. Those metrics are harder to track but more important.

The circulating supply sits at 2.23 billion Vanry out of 2.4 billion max. So about 93% is already liquid, with the rest distributing through block rewards over 20 years at predictable rates. That's unusual for a project at this stage. No massive unlock events waiting. As emission continues slowly, you get minimal selling pressure unless demand from actual AI usage stagnates. The bet operators are making is that machine intelligence adoption scales faster than the small remaining token supply hits markets.
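To put rough numbers on that emission claim, here is a back-of-the-envelope sketch in Python. The supply figures are the ones quoted above. The even, linear release over 20 years is my own simplifying assumption, since the actual block-reward curve may be front-loaded or decaying, and the function name is just illustrative.

```python
# Figures quoted in the article.
CIRCULATING = 2_230_000_000   # Vanry already circulating
MAX_SUPPLY = 2_400_000_000    # Vanry hard cap
EMISSION_YEARS = 20           # remaining block-reward window


def supply_summary(circulating=CIRCULATING, max_supply=MAX_SUPPLY,
                   years=EMISSION_YEARS):
    """Summarise how much supply is left and how fast it could arrive.

    ASSUMPTION: the remaining supply is emitted evenly across `years`.
    The real schedule is not specified here and may differ.
    """
    remaining = max_supply - circulating
    per_year = remaining / years
    return {
        "remaining": remaining,
        "liquid_share": circulating / max_supply,
        "per_year": per_year,
        # New tokens per year relative to what already circulates today.
        "annual_dilution": per_year / circulating,
    }


summary = supply_summary()
print(f"Remaining to emit: {summary['remaining']:,} Vanry")
print(f"Already liquid:    {summary['liquid_share']:.1%}")
print(f"Emission per year: {summary['per_year']:,.0f} Vanry (linear assumption)")
print(f"Annual dilution:   {summary['annual_dilution']:.2%} of circulating supply")
```

Under that linear assumption the remaining 170 million Vanry works out to about 8.5 million per year, or roughly 0.38% of today's circulating supply annually. That's the scale of new supply that AI usage demand would need to absorb.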

Here's what makes that bet interesting though. Developers building on Vanar Chain aren't just passive observers hoping AI narrative pumps their bags. They're integrating real infrastructure with real technical requirements. Persistent memory, on-chain reasoning, autonomous execution. If this doesn't work technically, they can't just pivot immediately. They're committed to architecture decisions until they rebuild, which takes time and has costs.

That commitment creates interesting dynamics. Developers who choose Vanar Chain aren't looking for quick narrative plays. They're betting on multi-year adoption curves where AI agent usage grows enough to justify infrastructure integration. You can see this in how they're building out applications like World of Dypians with over 30,000 active players running fully on-chain game mechanics. Not minimal implementations hoping to scrape by. Proper production deployment planning for scale.

The fixed transaction cost stays around half a cent. Predictable economics regardless of network congestion. That stability matters for AI agents conducting thousands of micro-transactions daily where variable gas fees would make operations economically impossible. When you're an autonomous system settling payments programmatically, you need consistency. Vanar Chain designed for that use case specifically, which is why the Worldpay integration exists connecting traditional payment rails to blockchain settlement.
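A quick sketch of why that predictability matters for an agent's budget. The half-cent fee comes from the figure above. Everything else is hypothetical: the transaction volume, the variable-fee samples, and the function names are made up purely to illustrate a congestion spike, not measured from any real network.

```python
FIXED_FEE_USD = 0.005  # "around half a cent" per transaction, per the article


def daily_cost_fixed(tx_per_day, fee_usd=FIXED_FEE_USD):
    """Daily spend when every transaction costs the same flat fee."""
    return tx_per_day * fee_usd


def daily_cost_variable(fee_samples_usd, tx_per_day):
    """Daily spend when fees vary, with transactions spread evenly
    across the sampled periods."""
    tx_per_period = tx_per_day / len(fee_samples_usd)
    return sum(fee * tx_per_period for fee in fee_samples_usd)


# HYPOTHETICAL per-transaction fees over six periods of one day,
# including a congestion spike. These are illustrative values only.
hypothetical_fees = [0.002, 0.002, 0.003, 0.05, 0.40, 0.02]

tx_per_day = 6000  # hypothetical agent workload
fixed_cost = daily_cost_fixed(tx_per_day)
variable_cost = daily_cost_variable(hypothetical_fees, tx_per_day)

print(f"Fixed fee:    ${fixed_cost:,.2f} per day")
print(f"Variable fee: ${variable_cost:,.2f} per day")
```

The point isn't the specific totals. With a flat fee, the agent's daily cost is a straight multiple of its transaction count, so it can be budgeted in advance. With variable fees, a single congested period can dominate the bill even when the quiet periods are cheaper than the flat rate.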

The cross-chain strategy has Vanar Chain existing in multiple ecosystems simultaneously rather than demanding migration. Bringing myNeutron semantic memory, Kayon natural language queries, and the entire intelligent stack onto Base means AI-native infrastructure becomes available where millions already work. Vanar Chain's approach means developers don't choose between ecosystems—they get these capabilities wherever they already build. Trying to serve developers where they are while maintaining technical coherence creates interesting tensions. When capabilities exist natively on multiple chains, the question becomes whether this maintains advantage or just fragments attention.

This is where isolated Layer 1s still have advantages in some ways. Clear sovereignty, controlled environments, optimized performance. Vanar Chain is competing against that with a model that's objectively more complex technically. They're betting that enough applications care about AI capabilities that actually work, about persistent memory that stays on-chain, about reasoning that's verifiable, to justify the added architectural complexity.

My gut says most projects won't care initially. They'll take simple smart contracts and call it AI because markets reward narratives over substance. But the subset that does need real infrastructure, maybe that's enough. If you're building anything where AI agents need to maintain context across sessions, where reasoning paths need cryptographic verification, where automation needs to happen without constant human oversight, then Vanar Chain starts making sense.

The expansion onto Base through cross-chain deployment suggests at least some serious developers are making that bet. Whether it pays off depends on whether the market for real AI infrastructure grows faster than hype cycles move on to the next narrative. It's early, but the technical foundation looks more substantive than most attempts at blockchain AI I've seen.

Time will tell if building beyond single-chain thinking works. For now Vanar Chain keeps processing transactions and applications keep using the AI stack. That's more than you can say for most "AI blockchain" protocols that are really just regular chains with AI mentioned in the documentation.