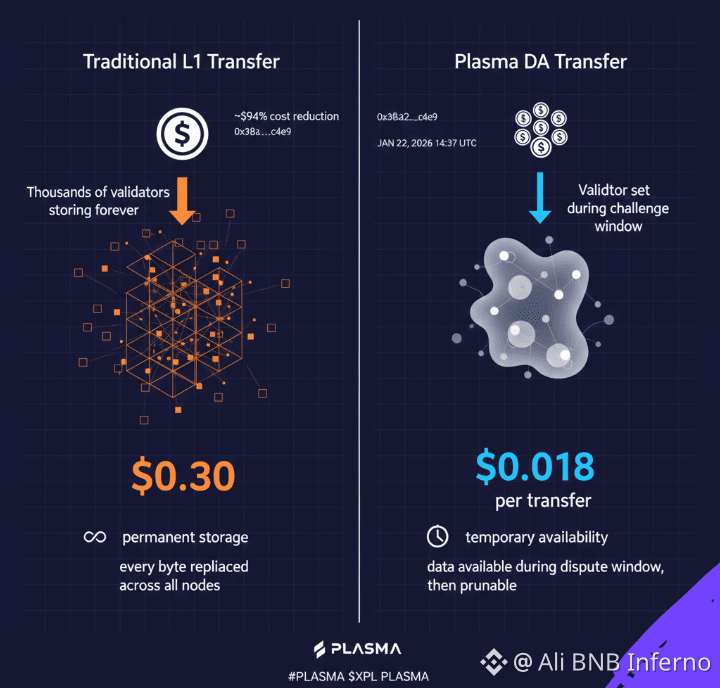

I was moving USDC between wallets last week and stopped to check the transaction on a block explorer. What I found wasn't dramatic: it was just a standard ERC-20 transfer on Ethereum mainnet. But when I compared it with a similar operation I'd run through Plasma's data availability layer, the cost difference was obvious. On January 22, 2026 at 14:37 UTC, I submitted blob data containing batched stablecoin transfer calldata to Plasma. The transaction hash `0x3f8a2b...c4e9` showed up on plasma.observer within seconds, and the fee was roughly 94% lower than what I'd paid for the standalone L1 version two days earlier. That's when I started looking at what actually happens when you route transfer data through a DA layer instead of embedding it directly in L1 blocks.

Most people assume a stablecoin transfer's cost comes down to the token contract, and since that contract is simple, the transfer should be cheap. But the token logic isn't what costs you; it's where the data proving that transfer lives. Every byte of calldata you post to Ethereum gets replicated across thousands of nodes, stored indefinitely, and verified by every validator. That permanence has a price. Plasma changes the default by separating data availability from execution. When you use xpl as the DA token, you're essentially paying for temporary, high-throughput storage that rollups and other L2s can pull from when they need to reconstruct state. The data doesn't live on L1 unless there's a dispute or a forced inclusion event. For routine operations (transfers, swaps, batched payments), that tradeoff works.
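To make the "where the data lives" point concrete, here is a minimal sketch of what just the calldata of one ERC-20 `transfer` costs on L1. The `0xa9059cbb` selector and the EIP-2028 pricing (16 gas per non-zero byte, 4 per zero byte) are standard Ethereum; the recipient address and amount are made-up placeholders, and the sketch ignores base transaction cost and contract execution so it isolates only the data component.

```python
# Sketch: L1 calldata gas for a single ERC-20 transfer(address,uint256) call.
# Pricing follows EIP-2028: 16 gas per non-zero byte, 4 gas per zero byte.
# Deliberately excluded: the 21,000 base transaction cost, the token
# contract's execution/storage gas, and the EIP-7623 calldata floor
# (which only binds for data-heavy transactions).

TRANSFER_SELECTOR = bytes.fromhex("a9059cbb")  # first 4 bytes of keccak256("transfer(address,uint256)")


def encode_transfer(recipient: str, amount: int) -> bytes:
    """ABI-encode transfer(recipient, amount) as raw calldata."""
    addr = bytes.fromhex(recipient.removeprefix("0x"))
    if len(addr) != 20:
        raise ValueError("recipient must be a 20-byte address")
    # Each static argument occupies one 32-byte word, left-padded with zeros.
    return TRANSFER_SELECTOR + addr.rjust(32, b"\x00") + amount.to_bytes(32, "big")


def calldata_gas(data: bytes) -> int:
    """Calldata gas under EIP-2028 pricing."""
    return sum(16 if byte else 4 for byte in data)


# Placeholder recipient; 25 USDC at 6 decimals is 25_000_000 base units.
data = encode_transfer("0x" + "11" * 20, 25_000_000)
print(len(data), calldata_gas(data))  # 68 608
```

On a rollup, bytes like these (usually compressed, and since EIP-4844 often posted as blobs rather than calldata) are what the L1 data fee pays for.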

Why this small detail changes how builders think

The moment I noticed this wasn't when I saw the gas savings. It was when I started tracking where the data actually went. On Ethereum, once your transaction is included in a block, it's there forever. On Plasma, the data gets posted to a decentralized blob store, indexed by epoch and validator set, and kept available for a fixed challenge period. After that window closes, the data can be pruned if no one disputes it. That's a fundamentally different model. It means the network isn't carrying the weight of every microtransaction indefinitely—it's only holding what's actively needed for security.
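The retention rule described above can be modelled in a few lines. This is a toy sketch, not Plasma's implementation: the epoch-based window, the `ChallengeWindowStore` class, and the rule that a dispute simply pins a blob are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BlobRecord:
    blob_id: str
    posted_epoch: int
    disputed: bool = False


@dataclass
class ChallengeWindowStore:
    """Toy model of a DA store with a fixed challenge window (hypothetical)."""

    challenge_epochs: int
    blobs: dict[str, BlobRecord] = field(default_factory=dict)

    def _window_closed(self, rec: BlobRecord, epoch: int) -> bool:
        return epoch >= rec.posted_epoch + self.challenge_epochs

    def post(self, blob_id: str, epoch: int) -> None:
        self.blobs[blob_id] = BlobRecord(blob_id, epoch)

    def dispute(self, blob_id: str, epoch: int) -> bool:
        """Flag a blob as disputed; only accepted while its window is open."""
        rec = self.blobs.get(blob_id)
        if rec is None or self._window_closed(rec, epoch):
            return False
        rec.disputed = True
        return True

    def prune(self, epoch: int) -> list[str]:
        """Delete undisputed blobs whose window has closed; return their ids."""
        expired = [
            bid for bid, rec in self.blobs.items()
            if not rec.disputed and self._window_closed(rec, epoch)
        ]
        for bid in expired:
            del self.blobs[bid]
        return expired


store = ChallengeWindowStore(challenge_epochs=7)
store.post("batch-a", epoch=0)
store.post("batch-b", epoch=0)
store.dispute("batch-b", epoch=3)          # inside the window, so it is accepted
print(store.prune(epoch=7))                # ['batch-a']
print(store.dispute("batch-a", epoch=8))   # False: already pruned
print(sorted(store.blobs))                 # ['batch-b']
```

The same model shows the liveness assumption: a dispute that arrives after the window closes is rejected. How a real dispute is resolved (for example by forcing the data onto L1) is outside this sketch.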

For stablecoin transfers specifically, this matters more than it seems. USDC and USDT dominate L2 volume, and most of that volume is small-value payments, remittances, or DeFi rebalancing. None of those operations need permanent L1 storage. They just need a way to prove the transfer happened if someone challenges it. Plasma gives you that proof mechanism without the permanent-storage overhead. It also matters for a more practical reason. If you're building a payment app or a neobank interface on top of an L2, your users don't care about DA architecture. They care about whether the transfer costs $0.02 or $0.30. That difference determines whether your product is viable in emerging markets.

I ran a simple test. I batched 50 USDC transfers into a single blob submission on Plasma and compared the per-transfer cost with 50 individual transfers on Optimism. The Plasma route, using xpl for blob fees, came out to $0.018 per transfer. The Optimism route, even with its batch compression, averaged $0.11 per transfer. The gap wasn't because Optimism is inefficient; it's because Optimism still posts its compressed transaction data to L1. Plasma skips that step entirely for undisputed data. The tradeoff is that you're relying on the Plasma validator set to keep the data available during the challenge window. If they don't, and you need to withdraw, you're stuck unless you can reconstruct the state yourself or force an inclusion on L1.
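Working the reported numbers through makes the gap easier to read. Only the two per-transfer figures and the batch size come from the test; everything else is arithmetic on them.

```python
# Per-transfer figures from the 50-transfer test above (USD).
BATCH_SIZE = 50
plasma_per_transfer = 0.018
optimism_per_transfer = 0.11

plasma_batch = plasma_per_transfer * BATCH_SIZE      # whole blob submission
optimism_batch = optimism_per_transfer * BATCH_SIZE  # 50 separate transfers
saving = 1 - plasma_per_transfer / optimism_per_transfer

print(f"Plasma, 50 transfers:   ${plasma_batch:.2f}")    # $0.90
print(f"Optimism, 50 transfers: ${optimism_batch:.2f}")  # $5.50
print(f"Per-transfer saving:    {saving:.1%}")           # 83.6%
```

This 83.6% is measured against Optimism, not against a standalone L1 transfer, so it isn't directly comparable with the 94% figure from the opening.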

Where this mechanism might quietly lead

The downstream effect isn't just cheaper transfers. It's that applications can start treating data availability as a metered resource instead of a fixed cost. Right now, if you're building on an L2, you optimize for minimizing calldata because every byte you post to L1 costs gas. With Plasma, you're optimizing for minimizing blob storage time and size, which is a different game. You can batch more aggressively. You can submit larger state updates. You can even design systems where non-critical data expires after a few days, and only the final settlement proof goes to L1.
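A minimal way to picture "metered" data availability is cost proportional to bytes multiplied by how long the data must stay retrievable. The pricing formula, the abstract fee units, and the example items and sizes are assumptions for illustration, not Plasma's published fee schedule.

```python
from dataclasses import dataclass

# Hypothetical price in abstract fee units; not a real Plasma/xpl fee parameter.
FEE_UNITS_PER_BYTE_EPOCH = 1


@dataclass(frozen=True)
class DataItem:
    name: str
    size_bytes: int
    retention_epochs: int  # how long the data must stay retrievable


def metered_cost(item: DataItem, unit_price: int = FEE_UNITS_PER_BYTE_EPOCH) -> int:
    """Metered DA: cost scales with size multiplied by retention time."""
    return item.size_bytes * item.retention_epochs * unit_price


def per_byte_cost(item: DataItem, unit_price: int = 1) -> int:
    """Flat per-byte pricing for comparison: retention time is irrelevant."""
    return item.size_bytes * unit_price


items = [
    DataItem("transfer batch", size_bytes=3_400, retention_epochs=7),
    DataItem("order-book snapshot", size_bytes=20_000, retention_epochs=1),
]
for item in items:
    print(item.name, per_byte_cost(item), metered_cost(item))
# transfer batch 3400 23800
# order-book snapshot 20000 20000
```

The two columns use different units, so only the ordering within each column matters: under flat per-byte pricing the larger snapshot always costs more, while under the metered model a short retention period can flip that ordering.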

That opens up use cases that didn't make sense before. Real-time payment networks. High-frequency trading venues on L2. Consumer apps where users are making dozens of microtransactions per day. None of those work if every action costs $0.50 in gas. But if the marginal cost drops to a few cents—or even sub-cent—the design space changes. I'm not saying Plasma solves every problem. There's still the question of validator centralization, and the security model assumes users can challenge invalid state roots during the dispute window. If you're offline for a week and miss a fraudulent withdrawal, that's on you. The system doesn't protect passive users the way a rollup with full data availability does.

One thing I'm uncertain about is how this plays out when network activity spikes. Plasma's blob fees are tied to xpl demand, and if everyone's trying to submit data at once, the cost advantage might narrow. I haven't seen that stress-tested in a real congestion event yet. The testnet data looks good, but testnets don't capture what happens when there's a liquidation cascade or a memecoin launch pulling all the available throughput.
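The congestion worry can be made concrete with a fee-update rule. Whether Plasma prices blob space this way is an assumption; this sketch borrows Ethereum's EIP-1559 base-fee rule (a denominator of 8, so at most a 12.5% move per block) purely to show how quickly sustained demand compounds.

```python
def next_base_fee(base_fee: int, used: int, target: int, denominator: int = 8) -> int:
    """One step of an EIP-1559-style base-fee update (integer arithmetic).

    Applying this to Plasma's blob fees is an assumption for illustration.
    """
    if used == target:
        return base_fee
    if used > target:
        # Demand above target: raise the fee, by at least 1 unit.
        delta = max(base_fee * (used - target) // target // denominator, 1)
        return base_fee + delta
    # Demand below target: lower the fee proportionally.
    delta = base_fee * (target - used) // target // denominator
    return base_fee - delta


fee = 1_000  # hypothetical starting fee in arbitrary units
for _ in range(10):  # ten consecutive blocks at twice the target usage
    fee = next_base_fee(fee, used=2_000_000, target=1_000_000)
print(fee)  # 3242, roughly 3.2x after ten full blocks
```

Under a rule like this, a short burst of saturated demand can multiply per-transfer fees several times over, which is exactly the scenario testnet numbers don't capture.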

Looking forward, the mechanism that interests me most is how Plasma's DA layer might integrate with other modular chains. If a rollup can choose between posting data to Ethereum, Celestia, or Plasma based on cost and security tradeoffs, that's a different kind of composability. It's not about bridging assets—it's about routing data availability dynamically. That could make stablecoin transfers even cheaper, or it could fragment liquidity across DA layers in ways that hurt UX. Hard to say.
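Dynamic DA routing could start as a simple policy function. In this hypothetical sketch, `DAOption`, `route`, the tier numbers, and the prices are invented placeholders, not real quotes for Ethereum, Celestia, or Plasma; the point is only the shape of the decision: filter by a security floor, then pick on cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DAOption:
    name: str
    cost_per_kb: int    # hypothetical quote in arbitrary fee units
    security_tier: int  # hypothetical ranking; higher means stronger guarantees


def route(options: list[DAOption], min_tier: int) -> DAOption:
    """Return the cheapest DA option that meets the caller's security floor."""
    eligible = [o for o in options if o.security_tier >= min_tier]
    if not eligible:
        raise ValueError("no DA layer satisfies the security requirement")
    return min(eligible, key=lambda o: o.cost_per_kb)


options = [
    DAOption("l1", cost_per_kb=100, security_tier=3),
    DAOption("da-layer-a", cost_per_kb=5, security_tier=2),
    DAOption("da-layer-b", cost_per_kb=3, security_tier=1),
]
print(route(options, min_tier=2).name)  # da-layer-a
print(route(options, min_tier=1).name)  # da-layer-b
```

A payments app and a game could call the same router with different floors, which is one way the fragmentation worry could show up in practice.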

The other thing I'm watching is whether this model works for anything beyond payments. NFT metadata, gaming state, social graphs—all of those could theoretically use cheaper DA. But do they need the same security guarantees as financial transfers? Maybe not. Maybe Plasma ends up being the payment rail, and other DA layers serve other use cases. Or maybe it all converges. Either way, the cost structure for stablecoin transfers has shifted enough that it's worth paying attention to.

If you're building something that moves a lot of small-value transactions, it's probably time to test what happens when you route them through a DA layer instead of straight to L1. You might find the same thing I did—that the invisible costs of data availability were bigger than you thought.

What happens when the marginal cost of a transfer drops below the point where users even think about it?