Selective disclosure is like having a private conversation in a glass building. You're still in a shared space, but you control which window opens, when it opens, and who gets to look in. I keep returning to that image when I read Dusk Network's recent material, because the project is explicitly trying to reconcile two instincts that usually fight each other on blockchains: keep financial activity confidential, while still making it provable when someone has a legitimate reason to verify it. Dusk itself frames the shift as moving away from radical transparency toward selective disclosure, and that's a telling choice of words: it's less about secrecy for its own sake and more about shaping what gets revealed and to whom.

This is trending now for a pretty practical reason: Europe is no longer treating crypto as a vague frontier. ESMA's overview of MiCA emphasizes uniform EU market rules and highlights transparency, disclosure, authorization, and supervision as core elements for issuance and trading. Alongside that, ESMA notes that the DLT Pilot Regime has applied since March 23, 2023, creating a legal framework for trading and settlement of certain tokenized instruments and enabling DLT-specific market infrastructures: a DLT multilateral trading facility (DLT MTF), a DLT settlement system (DLT SS), and a DLT trading and settlement system (DLT TSS). As that kind of structure tightens, the demand changes. Institutions don't just ask, "Can we tokenize this?" They ask, "Can we do it without exposing our positions, counterparties, or client data to the entire internet?"

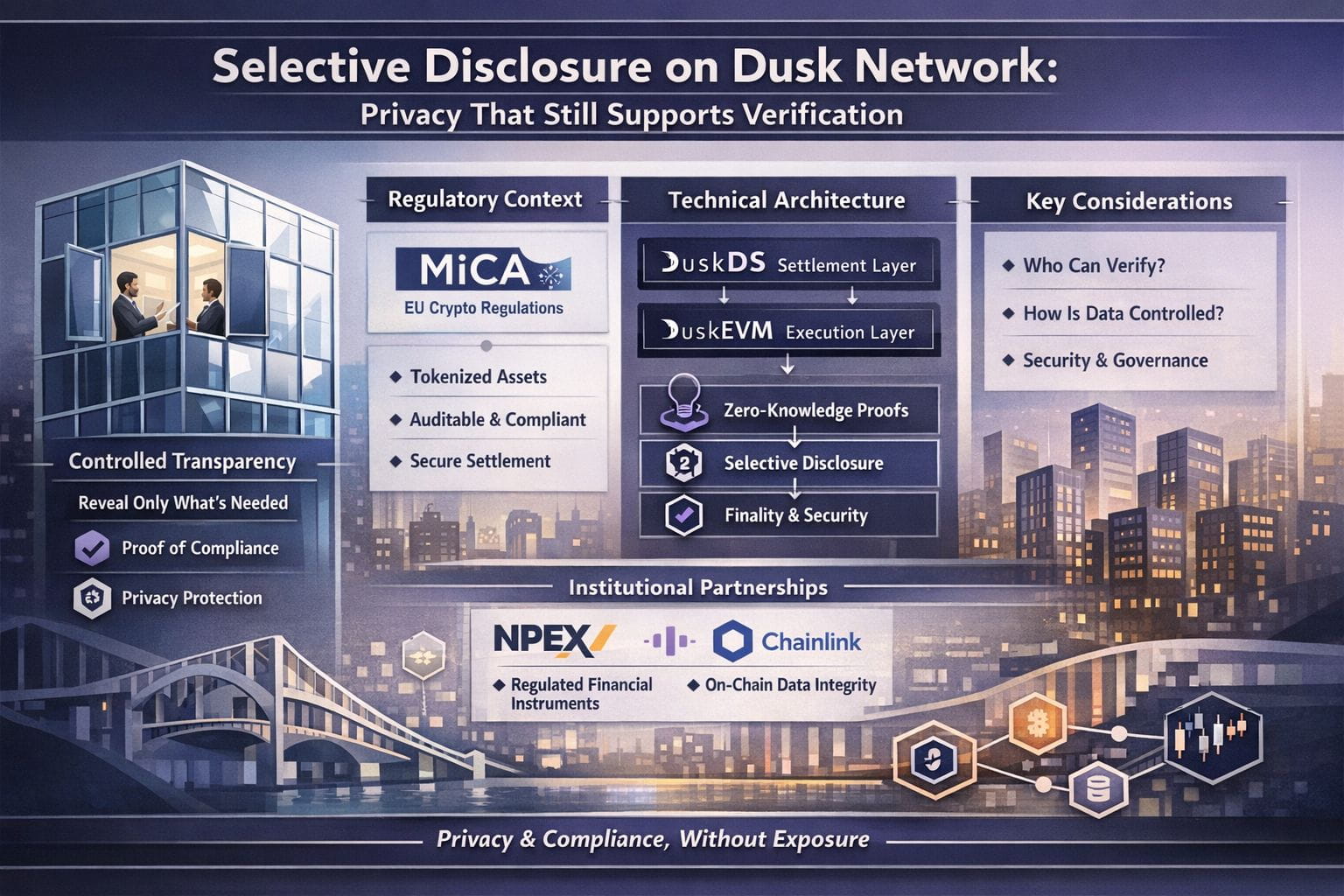

Dusk’s answer is to bake privacy into verification rather than bolt it on later. In its own writing, it describes “Zero-Knowledge Compliance,” where participants can prove they meet requirements without exposing personal or transactional details—an attempt to satisfy oversight needs without turning every trade into permanent public gossip. I find this framing unusually grounded, because it matches how regulated finance already works in the real world: sensitive information is shared on a need-to-know basis, and auditability comes from controlled records, not mass surveillance.
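To make the "which window opens" idea concrete, here is a minimal Python sketch of selective disclosure built on salted hash commitments. It is an illustration under stated assumptions, not Dusk's mechanism: Dusk's material describes zero-knowledge proofs, while this swapped-in technique still shows the verifier the one value that gets revealed. What it does capture is the basic shape: commit to every field up front, then open exactly one field for a chosen verifier while the others stay hidden. The functions `commit_field`, `issue`, `disclose`, and `verify`, and the record fields, are all hypothetical.

```python
# Hypothetical sketch: salted hash commitments, NOT Dusk's zero-knowledge mechanism.
import hashlib
import json
import secrets


def commit_field(salt: str, name: str, value: str) -> str:
    """Hash one (salt, name, value) triple; JSON encoding keeps the parts unambiguous."""
    encoded = json.dumps([salt, name, value]).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def issue(record: dict) -> tuple[list[str], dict[str, str]]:
    """Commit to every field. The digests can be shared; the salts stay with the holder."""
    salts = {name: secrets.token_hex(16) for name in record}
    # Sorting hides the original field order from anyone who sees only the digests.
    digests = sorted(commit_field(salts[n], n, str(v)) for n, v in record.items())
    return digests, salts


def disclose(record: dict, salts: dict[str, str], name: str) -> dict[str, str]:
    """Open one 'window': reveal a single field plus the salt needed to check it."""
    return {"salt": salts[name], "name": name, "value": str(record[name])}


def verify(published: list[str], disclosure: dict[str, str]) -> bool:
    """Recompute the digest of the revealed field and look for it among the commitments."""
    digest = commit_field(disclosure["salt"], disclosure["name"], disclosure["value"])
    return digest in published


# Hypothetical investor record; only "investor_status" is revealed to the verifier.
record = {"investor_status": "qualified", "jurisdiction": "NL", "position_eur": 2_500_000}
published, salts = issue(record)
proof = disclose(record, salts, "investor_status")
print(verify(published, proof))                        # True
print(verify(published, dict(proof, value="retail")))  # False: the value was altered
```

The random salts stop a verifier from confirming guesses about the hidden fields (for example, hashing every plausible `position_eur` value). A zero-knowledge version would go one step further and prove a predicate such as "this investor is qualified" without revealing the field at all, and a real deployment would also need the published digests signed or anchored somewhere the verifier trusts; here they are just a list.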

A lot of articles about privacy chains drift into abstractions, so it helps that Dusk is fairly concrete about its architecture. Its documentation describes DuskDS as the settlement, consensus, and data-availability layer that provides finality and security for the execution environments built on top of it, and the DuskEVM deep dive spells out the split directly: DuskDS handles settlement and data availability, while DuskEVM is the EVM execution environment. In plain language, that modularity matters because selective disclosure then isn't just a feature; it becomes a property of how the system settles and proves correctness, not merely something an app interface claims.

This part lands because Dusk has actually had to live with the consequences of its design choices. In December 2024, the team shared a mainnet rollout plan that was concrete rather than promotional: it laid out practical steps, including early deposits, and pointed to January 7 as the moment the chain would start producing immutable blocks. Then in January 2026, a bridge issue put the spotlight on the unglamorous side of running infrastructure. Dusk's message was basically: the mainnet is fine, there's no protocol-level break, but we're pausing bridge services while we tighten things up. It's the kind of update you only appreciate if you've ever watched a system get tested outside a whitepaper. I tend to trust privacy projects more when they communicate like infrastructure teams: specific scope, clear impact, no theatrics.

Selective disclosure also becomes easier to picture when you look at what Dusk is building with partners. Dusk announced an agreement with NPEX around issuing, trading, and tokenizing regulated financial instruments, positioning it as a regulated collaboration rather than a purely crypto-native experiment. Later, Dusk framed the NPEX relationship as giving access to a suite of financial licenses and embedding compliance expectations into how the protocol is used. There’s also the Chainlink angle: reports about Dusk and NPEX adopting Chainlink interoperability and data standards describe the goal as bringing regulated European securities on-chain with reliable connectivity and data. If the ambition is to host regulated markets, selective disclosure stops being philosophical—it becomes operational policy expressed through proofs, permissions, and attestations.

None of this removes the hard questions. Who gets to request disclosure, under what process, and how do you prevent "verification" from quietly becoming a back door to blanket monitoring? Even strong cryptography can't answer governance by itself. The cryptography also has to be treated as living software. Dusk's own post on remediating a critical PLONK vulnerability, prompted by a disclosure from Trail of Bits, is a useful reminder that privacy systems earn credibility through how they handle flaws, not by pretending flaws don't happen.

If Dusk succeeds, it won’t be because it made privacy fashionable. It’ll be because it made confidentiality compatible with the kind of verification regulators and market operators actually require—especially in a Europe shaped by MiCA and the DLT Pilot’s market-infrastructure model. The most honest test will be boring: audits, incident reports, and whether institutions can prove compliance without leaking their entire business to the world. That’s what selective disclosure should feel like at its best: privacy as dignity, verification as accountability, and a system designed to keep those two from tearing each other apart.