Last night at home, Ah Zu did something both boring and practical: a 'clean-up' of the wallets he uses most.

I clicked through MetaMask, Rabby, and OKX Wallet, and every Network List was crammed with assorted 'Mainnet', 'Chain', and 'L2' entries, with Chain IDs running from 1 and 56 to 137, 42161, and 8453, scrolling on well past a single screen. At first glance it looks prosperous, like evidence of the 'multi-chain era', but on closer inspection it feels a bit absurd: for developers, most of these are just 'cheaper EVMs', and for regular users, each is just 'one more logo, one more RPC'.

At that moment, I felt a bit annoyed: do we really need so many copy-paste versions of Ethereum? If AI really does go on-chain in the future, which chain will it choose as its 'home'? Will it really come down to whose gas is cheapest? The question kept circling in my mind, and my mouse stopped once again on a number I had never paid attention to before: Chain ID 2040, with 'Vanar Mainnet' on the line below.

I used to see it as 'just another EVM'. This time, I asked the question from a different angle: if Vanar only wanted higher TPS and cheaper gas, it would have no reason to jump into the 'AI-ready L1' pit. Since it insists on waving the AI flag, what has it changed at the base layer that other EVMs are unwilling or unable to touch?

First, let's peel back the surface layer. From a wallet's perspective, the Vanar mainnet looks very simple: the Network Name is Vanar Mainnet, the RPC and WSS endpoints are filled in as usual, the Chain ID is 2040, and the native token is VANRY. You write and deploy contracts in Solidity; the workflow with Hardhat, Foundry, and Tenderly is much the same as on Ethereum; and when you open the block explorer, it is still the interface you know. All of this signals that Vanar has no intention of making developers relearn the language or the VM; instead, it chooses to hide all the 'new things' beneath the EVM.
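To make those wallet fields concrete, here is a minimal sketch of how they would map onto a `wallet_addEthereumChain` request as defined in EIP-3085. The Chain ID, network name, and token symbol come from the text above; the RPC and explorer URLs are hypothetical placeholders, and the 18 decimals and the currency `name` are assumptions based on the usual EVM convention, not values confirmed here.

```python
import json

CHAIN_ID = 2040  # the Chain ID shown in the wallet's network list


def build_add_chain_request(rpc_url: str, explorer_url: str) -> dict:
    """Build an EIP-3085 wallet_addEthereumChain JSON-RPC payload."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "wallet_addEthereumChain",
        "params": [{
            # EIP-3085 expects the chain ID as a 0x-prefixed hex string
            "chainId": hex(CHAIN_ID),
            "chainName": "Vanar Mainnet",
            "nativeCurrency": {
                "name": "VANRY",    # assumption: name mirrors the symbol
                "symbol": "VANRY",
                "decimals": 18,     # assumption: standard EVM convention
            },
            "rpcUrls": [rpc_url],
            "blockExplorerUrls": [explorer_url],
        }],
    }


request = build_add_chain_request(
    "https://rpc.example.invalid",       # hypothetical placeholder, not a real endpoint
    "https://explorer.example.invalid",  # hypothetical placeholder, not a real explorer
)
print(json.dumps(request, indent=2))
```

Nothing in this payload hints at anything AI-related, which is exactly the point: from the wallet's side, 2040 is configured like any other EVM network.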

The catch lies in the phrase 'hidden beneath the EVM'. The default assumption of traditional public chains is simple and crude: the chain is responsible only for accounting and consensus. Whether you throw money, NFTs, order books, or a pile of model parameters at it, in its eyes they are all just a lump of bytes. It cares about quantity, source, and destination, but not whether these things 'mean' anything to a machine; the intelligence is pushed out to off-chain servers, private databases, and project backends, with the chain at most recording a result hash or verifying a proof.

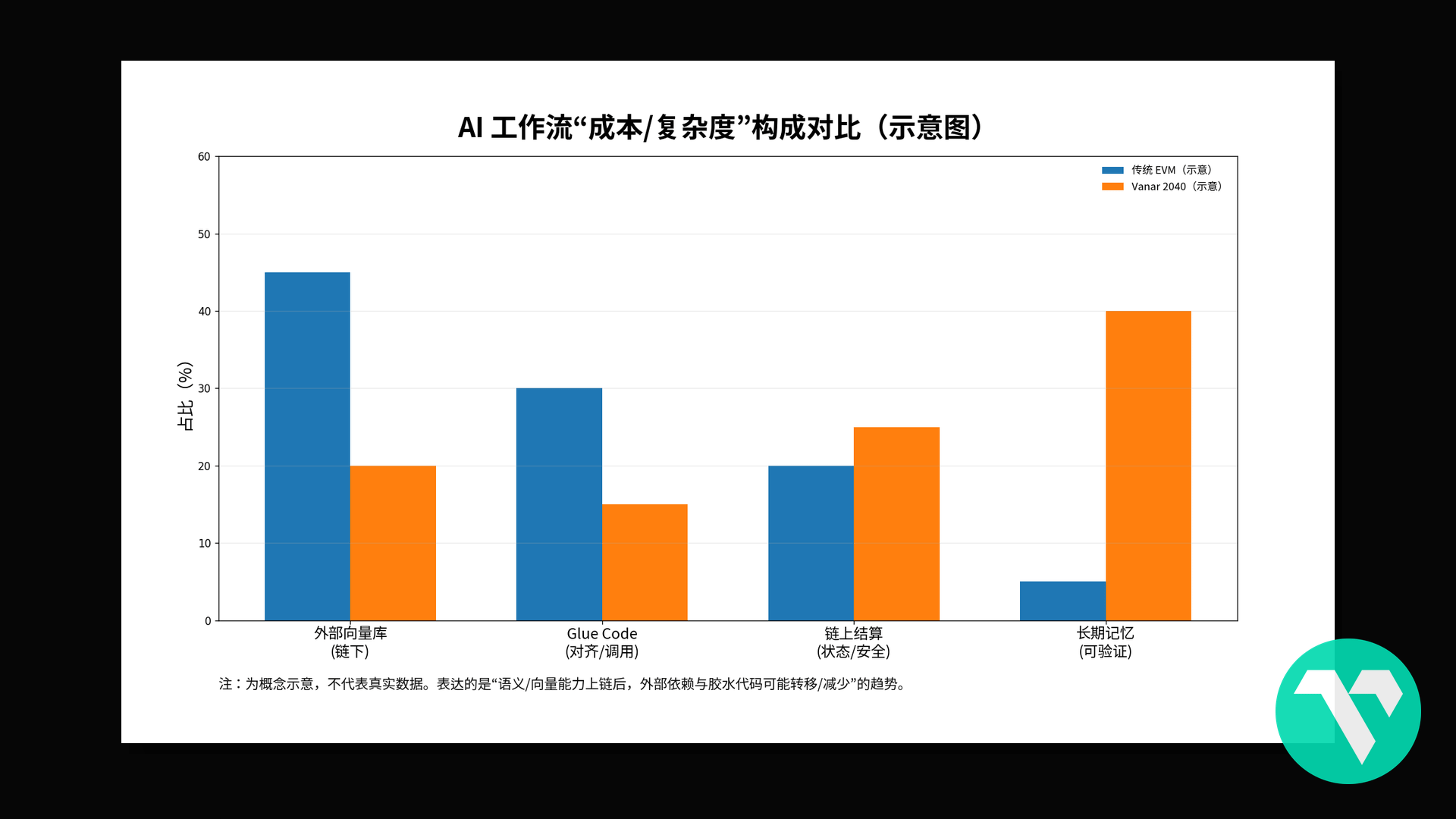

This setup runs DeFi and payments without issue, but shift the perspective to AI and it exposes an awkward reality: the vast majority of chains can only serve as a 'settlement outlet' for AI. Models run batches of inference every day, then post a compressed hash on-chain for reconciliation; the truly valuable memories, semantics, and context stay locked in off-chain vector stores and log systems. For an AI to reuse yesterday's memory, it must first retrieve embeddings from an off-chain vector database and then cross-reference them with on-chain state, which is less 'AI on-chain' than 'using AI to patch an old-style ledger'.
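The 'hash on-chain, memory off-chain' pattern described above can be sketched in a few lines. This is a toy model under stated assumptions: both stores are plain dictionaries standing in for a real vector database and a real ledger, and the function names are hypothetical.

```python
import hashlib
import json

# Hypothetical stand-ins: a dict as the off-chain vector store, and a dict
# as the on-chain ledger, which only ever sees a fixed-size digest.
off_chain_store: dict[str, dict] = {}
on_chain_ledger: dict[str, str] = {}


def digest_of(payload: dict) -> str:
    """Deterministic SHA-256 digest of a JSON-serializable payload."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def record_inference(record_id: str, text: str, embedding: list[float]) -> str:
    """Keep the full memory off-chain and anchor only its hash on-chain."""
    payload = {"text": text, "embedding": embedding}
    off_chain_store[record_id] = payload
    on_chain_ledger[record_id] = digest_of(payload)
    return on_chain_ledger[record_id]


def verify(record_id: str) -> bool:
    """Check that the off-chain memory still matches its on-chain hash."""
    return digest_of(off_chain_store[record_id]) == on_chain_ledger[record_id]


record_inference("run-1", "user prefers stablecoin pools", [0.9, 0.1, 0.0])
print(verify("run-1"))  # True while the off-chain copy is unmodified
```

In this arrangement the ledger can confirm that a memory was not altered, but it cannot answer 'which memories resemble this one'; that question can only be put to the off-chain store.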

The unsexy but critical thing Vanar is doing at the base layer is overturning this default. It doesn't just say it 'supports AI applications'; it treats 'memory' and 'semantics' as first-class citizens when designing the chain's data structures. On the surface, it's still the familiar EVM chain, and 2040 is just another parameter you enter when adding the network; the real difference is that it builds vector storage and semantic operations into the capabilities of the mainnet.

For AI, what's truly usable isn't a series of original documents but a series of embeddings. Many projects store the original text or hash on-chain while opening a vector library off-chain, relying on APIs to shuttle back and forth. Vanar's approach is straightforward: since most long-term memory in the future will exist in vector form, why not make room for these objects in L1, allowing them to be on-chain, indexed, and searched by similarity, rather than treating them as meaningless bytes stuffed into storage? On most chains, embeddings are external; on Vanar, embeddings are more like assets protected by the chain's native rules.

This directly shapes its state layout. A traditional EVM resembles a massive key-value warehouse: if you know the key, you can get the value, and whether the values are semantically related is of no concern to the virtual machine. Vanar's assumption is that future chains will be filled with AI memories, task trajectories, and preference configurations, which need to be recalled by 'similarity' and 'semantic proximity' rather than by humans remembering a long list of slots and mappings. As a result, many parts you never see have been rewritten in a 'semantic-friendly' direction.
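The contrast between the two kinds of recall can be illustrated generically. The sketch below is not Vanar's API; it uses hand-made three-dimensional toy vectors and hypothetical slot names purely to show the difference between 'I know the key' and 'find what is closest in meaning', with cosine similarity as the closeness measure.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy state: slot name -> (memory text, hand-made embedding). Illustrative only.
memories = {
    "slot_0": ("prefers low-risk stablecoin pools", [0.9, 0.1, 0.0]),
    "slot_1": ("asked about NFT minting fees", [0.1, 0.9, 0.1]),
    "slot_2": ("avoids leverage above 2x", [0.8, 0.2, 0.1]),
}


def recall_by_key(key: str) -> str:
    """Key-value recall: the caller must already know the exact slot."""
    return memories[key][0]


def recall_by_similarity(query: list[float], top_k: int = 2) -> list[str]:
    """Semantic recall: rank every memory by closeness to the query vector."""
    ranked = sorted(
        memories.values(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


print(recall_by_key("slot_1"))
# asked about NFT minting fees
print(recall_by_similarity([1.0, 0.0, 0.0]))
# ['prefers low-risk stablecoin pools', 'avoids leverage above 2x']
```

The second call never names a slot: the two risk-related memories surface because their vectors point in roughly the same direction as the query.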

On this chain, a contract call no longer just reads and writes a few storage locations, but could potentially trigger a complete process of 'recalling a batch of related memories → updating according to rules → writing back on-chain'. The contract still has the interface signatures you’re used to, but the underlying organization of these memories has been restructured according to AI's working habits. You could crudely understand the difference as: other chains record money and states in the ledger, while Vanar attempts to record 'memory blocks and semantic fragments that AI can directly understand' on the ledger.
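The 'recall → update → write back' loop can be sketched as a single function over a state dictionary. Everything here is hypothetical and for illustration only: the `Memory` record, the `hits` counter used as the update rule, and the dot-product threshold are assumptions, not a description of how any real chain implements this.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Memory:
    text: str
    vector: tuple[float, ...]
    hits: int = 0  # hypothetical field: how often this memory was recalled


def dot(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    return sum(x * y for x, y in zip(a, b))


def handle_call(state: dict[str, Memory], query: tuple[float, ...],
                threshold: float = 0.5) -> list[str]:
    """One call: recall related memories, apply a rule, write the result back."""
    # 1. Recall: select the memories whose vectors are close enough to the query.
    recalled = {k: m for k, m in state.items() if dot(query, m.vector) >= threshold}
    # 2. Update by rule: here the rule simply counts the recall.
    updated = {k: replace(m, hits=m.hits + 1) for k, m in recalled.items()}
    # 3. Write back: commit the new versions into the shared state.
    state.update(updated)
    return sorted(updated)


state = {
    "a": Memory("likes stablecoins", (1.0, 0.0)),
    "b": Memory("asked about NFTs", (0.0, 1.0)),
}
print(handle_call(state, (0.9, 0.1)))  # ['a']
print(state["a"].hits, state["b"].hits)  # 1 0
```

The caller passes a query and gets back which memories were touched; it never has to know where those memories sit in storage.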

Once you accept that 'memory' and 'semantics' are serious business, the performance metrics that matter for a mainnet naturally change. If you only run transactions, you can lean on L2s, push state outward, and even sacrifice a lot of engineering detail for a spot on the TPS leaderboard. Once you have to carry AI's long-term memory and verifiable behavior records, you must face heavier node loads, larger data throughput, and much longer time horizons. The specifications Vanar publishes and the transaction volume it actually handles look geared toward sustained, long-running workloads rather than tuned to hit a single peak number.

From the perspective of market sentiment, this choice has little appeal. It can't tell a story of 'our TPS is X times higher and our gas is far cheaper than everyone else's', nor can it easily ride the same rally curve as a bunch of meme coins. The eye-catching narratives will always be about some coin multiplying in price or some application exploding in daily active users, while something like Vanar, which slowly tightens screws from the bottom up, is easily filed under 'boring'.

Go back to the parameters you skimmed past in your wallet: 2040 and VANRY. On the surface they are just 'network number + gas token', but they carry a second layer of meaning. 2040 is no longer just an integer in an RPC configuration but a label in a memory coordinate system. As more AI-oriented applications write embeddings, dialogue trajectories, preferences, and strategies onto this chain, 2040 will gradually become shorthand for 'AI memory hub': you can deploy the same contract and frontend on many EVMs, but only the version attached to the state of 2040 is the one treated as 'long-term reusable by AI'.

VANRY is not just 'the gas of this L1', but more like 'the electricity bill for AI reading and writing memories'. Every time you write a new semantic memory, compress a batch of vectors on-chain, or update the behavior trajectory of a certain agent, the consumed gas is partly for security, ensuring these memories cannot be casually rewritten and do not evaporate due to node downtime; the other part is for long-term usability, guaranteeing that ten or twenty years later, a certain intelligent agent can still retrieve them from the state tree of 2040. The question is no longer just 'is this transaction worth it', but 'is this memory worth being preserved long-term and exposed for future AI to continue using'.

If you believe one thing—that after 2026, AI will gradually evolve from a chat toy into a true 'on-chain worker' and 'long-term decision participant'—then you will eventually need to ask yourself: where will these workers' memories be stored on-chain? Keeping them in a centralized vector library is convenient, but that's someone else's warehouse; putting them on a public chain that doesn’t care about semantics might barely work, but you'd have to write countless layers of glue code to cater to its 'key-value world' setup.

Amid a pile of copy-pasted EVMs, Vanar at least did something others were too lazy or unwilling to do: acknowledge that AI needs memory, needs semantics, and needs long-term verifiable state. It then hardcoded these requirements into the foundation of a mainnet, from Chain ID and data structures to gas pricing. Whether to buy VANRY now is your choice; what I can do is lift the floorboards of 2040 so you can see what lies beneath.