There was a moment, after sitting through enough incidents, postmortems, and “community calls,” when I realized that most blockchain systems don’t actually fear failure. They fear silence after failure. The awkward pause when no one is sure who is responsible, what exactly broke, or whether the system itself is allowed to decide anything without human interpretation stepping in.

That is when I started paying attention not to how systems recover, but to how much attention failure demands when it happens.

Most infrastructure in crypto treats failure as an event. Something to be explained, coordinated around, voted on, patched, or narrated. Failed transactions become data. Reverted states become signals. Edge cases become discussion threads. Over time, failure stops being an exception and becomes part of the system’s daily behavior. Something everyone learns to work around.

Dusk takes a noticeably different position. It does not try to handle failure gracefully. It tries to make failure uninteresting.

That sounds counterintuitive at first. In engineering culture, resilience is often framed as how well a system responds when things go wrong. Fast recovery. Clear rollback paths. Transparent incident handling. Those are all valid goals, but they also assume that failure is something worth responding to at runtime.

Dusk seems to reject that assumption.

Instead of asking how the system should react when something invalid happens, Dusk asks a quieter question: what if invalid behavior simply never became something the system had to react to at all?

This is not a philosophical stance. It is embedded directly into how outcomes are allowed to exist.

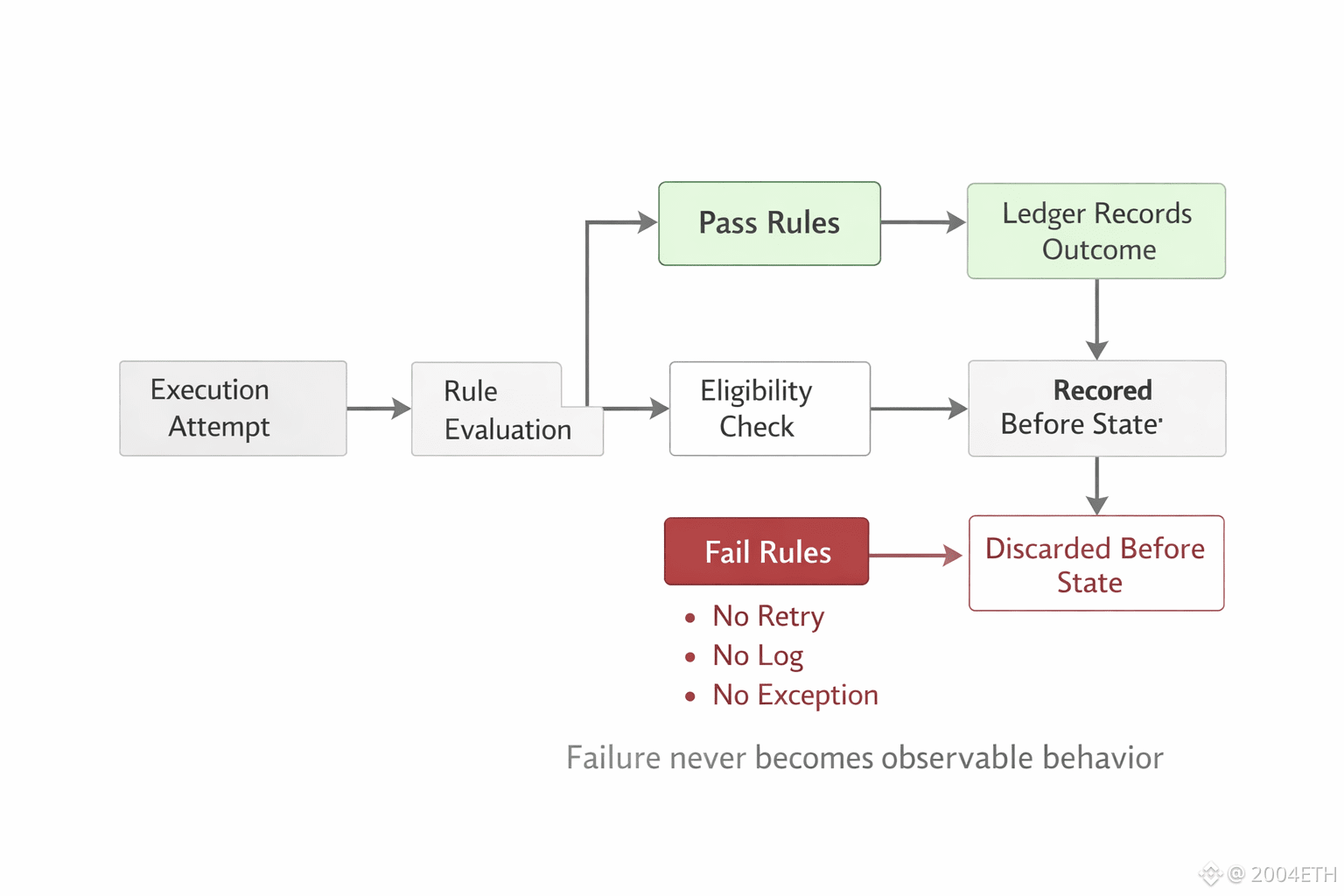

At the core of Dusk’s architecture, failure is not treated as a first-class object. There is no concept of a failed state that needs later interpretation. No partial execution that leaves traces behind. No reverted history that analysts need to reconstruct. If a transaction or action does not satisfy the required conditions, it does not graduate into something observable. It does not demand attention. It does not become an event.

In other words, failure does not accumulate.

That choice immediately narrows the behavior space of the system. Many blockchains accept invalid attempts as part of the ledger’s story. They rely on tooling, indexing, and analytics to separate what mattered from what did not. Over time, the system becomes noisy. Observers learn to read around the noise, but the noise still shapes incentives, fee dynamics, and behavior.

Dusk refuses that feedback loop.

By enforcing rules before settlement, the system ensures that only outcomes that already qualify are allowed to exist. Everything else is excluded before it becomes data. There is nothing to retry, nothing to reconcile, nothing to monitor. Failure does not become a signal. It disappears.
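To make the contrast concrete, here is a minimal sketch of the two approaches. It is a toy model, not Dusk's actual API: `Attempt`, `is_valid`, `RecordingLedger`, and `FilteringLedger` are hypothetical names, and the single balance rule stands in for whatever conditions a real protocol enforces.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Attempt:
    sender: str
    amount: int


def is_valid(balances, attempt):
    """Hypothetical rule: a positive amount that the sender can cover."""
    return 0 < attempt.amount <= balances.get(attempt.sender, 0)


@dataclass
class RecordingLedger:
    """Records every attempt, valid or not, tagged with a status."""
    balances: dict
    history: list = field(default_factory=list)

    def submit(self, attempt):
        ok = is_valid(self.balances, attempt)
        if ok:
            self.balances[attempt.sender] -= attempt.amount
        self.history.append((attempt, "ok" if ok else "failed"))
        return ok


@dataclass
class FilteringLedger:
    """Checks the rule before settlement; invalid attempts leave no trace."""
    balances: dict
    history: list = field(default_factory=list)

    def submit(self, attempt):
        if not is_valid(self.balances, attempt):
            return False  # nothing is written: no failed entry, no revert record
        self.balances[attempt.sender] -= attempt.amount
        self.history.append(attempt)
        return True


attempts = [Attempt("alice", 50), Attempt("alice", -1), Attempt("alice", 4)]
recording = RecordingLedger({"alice": 10})
filtering = FilteringLedger({"alice": 10})
for a in attempts:
    recording.submit(a)
    filtering.submit(a)

print(len(recording.history))  # 3: two failures are now part of the record
print(len(filtering.history))  # 1: only the outcome that qualified exists
```

Both ledgers end with the same balances. The only difference is what someone reading the history later has to interpret.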

This has a subtle but important effect. Systems that record failure invite optimization around failure. Actors probe boundaries. Bots test edge cases. Behavior adapts to what the system tolerates, not just to what it encourages. Over time, the network learns from noise that never should have existed in the first place.

Dusk’s design blocks that learning process entirely.

From the outside, this can look restrictive. Less visible activity. Fewer observable attempts. Fewer traces to analyze. But that absence is not accidental. It is the product.

What Dusk seems to optimize for is not correctness after the fact, but the absence of situations that require explanation later. If something cannot meet the rules, it does not leave behind artifacts that someone must interpret months down the line under different incentives.

This matters in environments where responsibility cannot be abstracted away.

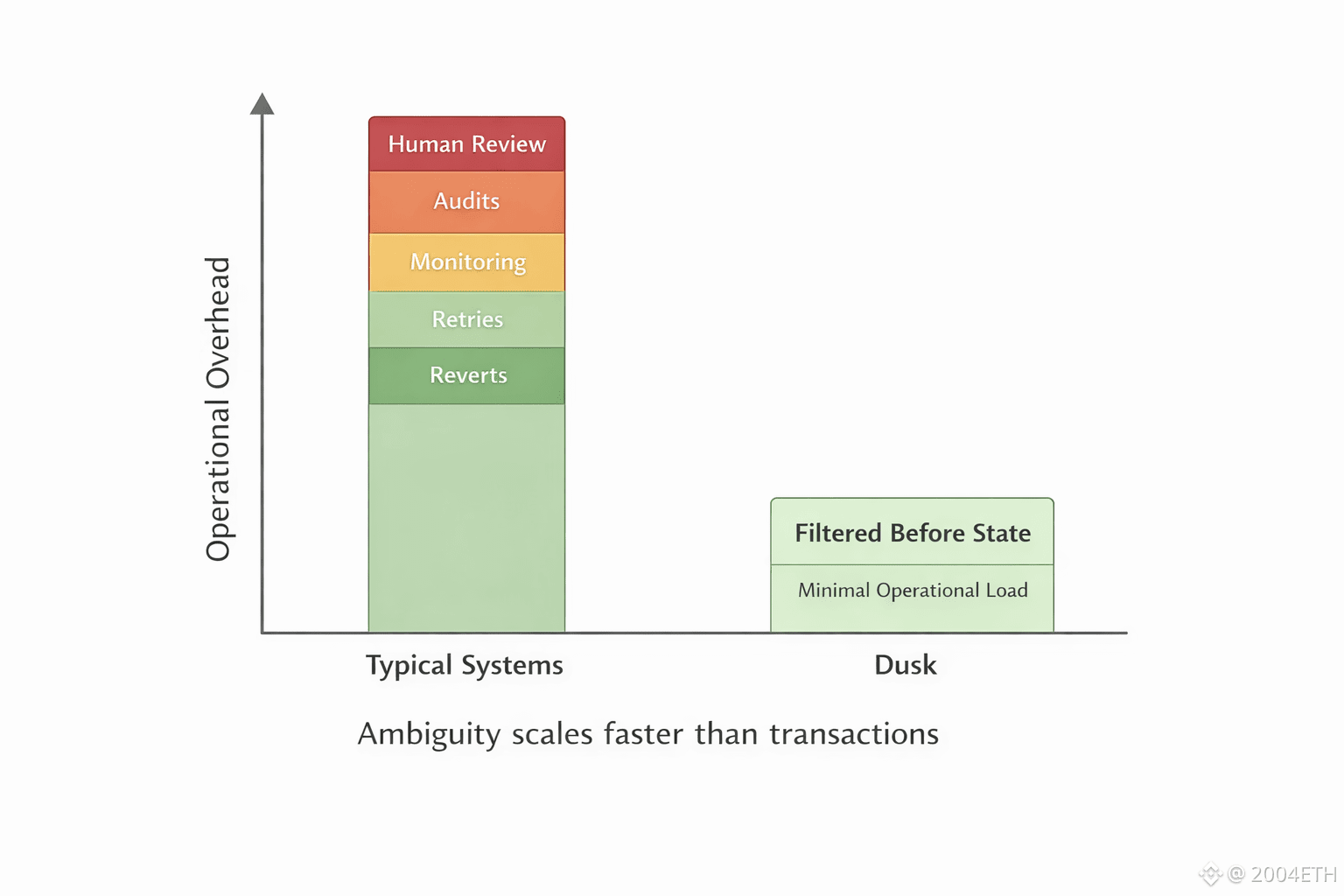

In real financial operations, failure is expensive not because it happens, but because of what happens next. Audits. Reviews. Reconciliation. Internal escalation. Human time spent explaining why something occurred and whether it should have been allowed. These costs do not scale linearly with transaction volume. They scale with ambiguity.

Dusk reduces that ambiguity by ensuring that failure never becomes part of the operational surface.

The execution layer still exists. Applications still run. Logic can still be expressive. Developers are not prevented from experimenting. But the system draws a hard line between experimentation and consequence. Execution may attempt many things. Settlement accepts very few.

Outcomes are candidates until they are proven acceptable. And unacceptable outcomes simply vanish.
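One way to picture the split between execution and settlement is a candidate-then-commit pattern. The sketch below is an illustration under assumptions, not a description of Dusk's internals: `execute`, `acceptable`, and `settle` are hypothetical helpers, and the two settlement rules (no negative balances, total supply conserved) are invented for the example.

```python
import copy


def execute(state, program):
    """Run arbitrary logic against a scratch copy and return a candidate state."""
    candidate = copy.deepcopy(state)
    program(candidate)
    return candidate


def acceptable(before, after):
    """Hypothetical settlement rules: no negative balances, supply conserved."""
    return (all(v >= 0 for v in after.values())
            and sum(after.values()) == sum(before.values()))


def settle(state, program):
    """Return the candidate if it qualifies, otherwise the untouched state."""
    candidate = execute(state, program)
    return candidate if acceptable(state, candidate) else state


def overdraw(s):
    s["alice"] -= 50
    s["bob"] += 50


def pay(s):
    s["alice"] -= 4
    s["bob"] += 4


state = {"alice": 10, "bob": 0}
state = settle(state, overdraw)  # candidate discarded, state unchanged
after_overdraw = dict(state)
state = settle(state, pay)       # candidate accepted
```

Execution is free to try anything because it only ever touches a copy. The discarded candidate is never stored anywhere, so there is nothing left over to explain.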

This also explains why Dusk can feel quiet.

Quiet systems are often mistaken for inactive ones. In reality, quiet systems are often the result of aggressive filtering. Less makes it through. Not because nothing is happening, but because the system is unwilling to carry the burden of interpreting everything that could have happened.

That restraint introduces real trade-offs.

Some classes of debugging become harder. Developers do not get a rich on-chain history of failed attempts to analyze. Behavior that might be corrected through retries in other systems must be corrected before submission here. The protocol does not help you recover from invalid assumptions. It expects you not to make them.
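In practice, that pushes validation to the client. A rough sketch of what "corrected before submission" might look like follows. Everything here is hypothetical, including the `preflight` and `submit_if_clean` names, the three rules, and the `send` callback that stands in for whatever actually broadcasts a transaction.

```python
def preflight(amount, available, limit):
    """Return the reasons this transfer would not qualify (empty if none)."""
    problems = []
    if amount <= 0:
        problems.append("amount must be positive")
    if amount > available:
        problems.append("amount exceeds available balance")
    if amount > limit:
        problems.append("amount exceeds per-transfer limit")
    return problems


def submit_if_clean(amount, available, limit, send):
    """Call send only when the local checks pass; otherwise report why not."""
    problems = preflight(amount, available, limit)
    if problems:
        return problems  # fix these locally; nothing was sent
    send(amount)
    return []


sent = []
rejected = submit_if_clean(50, available=10, limit=100, send=sent.append)
accepted = submit_if_clean(4, available=10, limit=100, send=sent.append)
```

The diagnostic detail that other systems leave on the ledger has to live in the developer's own tooling instead.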

That friction is real, and it will push certain builders away.

But the upside is operational clarity.

When failure does not persist, operations do not revolve around exception handling. There are fewer alerts, fewer escalations, fewer situations where humans are asked to decide what the system meant. Responsibility stays closer to the point of intent, not scattered across time and organizations.

I have seen enough systems where the hardest problems were not exploits or outages, but long-tail failures that required interpretation long after the original context was gone. In those systems, failure was interesting. It generated discussions, governance motions, and competing narratives. Each layer added a little more uncertainty.

Dusk seems designed to avoid that entire category of problem.

It does not celebrate resilience. It avoids situations that require it.

This is not a guarantee of success. Making failure uninteresting does not make systems immune to error. It shifts where errors must be resolved. It forces discipline earlier. It removes safety nets that other systems rely on.

Markets often prefer flexibility over discipline. Stories are easier to tell when systems adapt visibly. Quiet refusal does not generate hype.

But infrastructure that lasts is rarely defined by how well it recovers from failure. It is defined by how rarely it asks humans to interpret failure at all.

Dusk’s bet is simple, and uncomfortable. If failure never becomes something worth noticing, responsibility becomes easier to carry. Not because the system is perfect, but because it refuses to let imperfection harden into history.

That is not an exciting promise. It is a disciplined one.

And discipline, in infrastructure, is often invisible until it is missing.