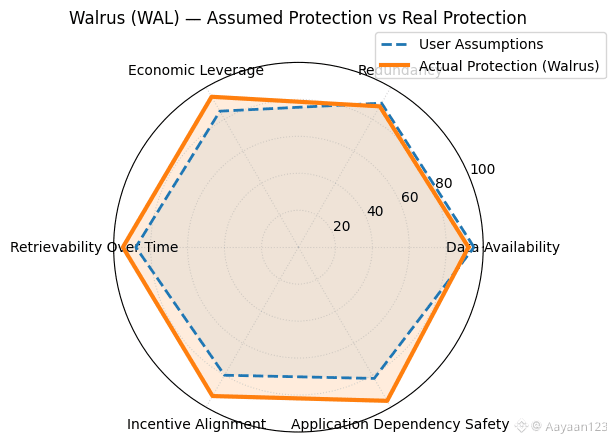

Most users move through crypto with a quiet set of assumptions. If something is decentralized, it must be protected. If data is on-chain, it must be safe. If access is restricted at the app level, it must be enforced everywhere else. These assumptions aren’t careless; they’re inherited. Years of tooling trained people to trust that the layers beneath an application are doing more work than they actually are.


The gap between what users assume is protected and what truly is protected usually doesn’t show up as a failure. Nothing crashes. Nothing leaks all at once. Instead, it shows up later, when behavior changes in ways no one planned for. Data that was “meant” to be private turns out to be accessible in indirect ways. Permissions that felt solid dissolve once an app evolves or a dependency changes. Control exists, but only where someone remembered to implement it.

That’s the uncomfortable part. Protection often lives in the wrong place.

In many systems, data is technically stored safely, but its boundaries are vague. Access rules are enforced by applications rather than the storage layer itself. That means protection depends on every app getting it right, every time. When things are simple, that works. As systems grow, it becomes fragile. One missed check, one reused dataset, or one assumption carried too far, and it turns out the guarantees users thought they had were never really there.
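To make that fragility concrete, here is a minimal sketch of app-level enforcement. Everything in it is hypothetical (`RAW_STORE`, `ALLOWED_READERS`, `app_a_read`, `app_b_read`); it is a generic illustration of the pattern, not the API of Walrus or any real storage system.

```python
# Hypothetical illustration: none of these names come from a real library.

# A storage layer that holds data but enforces no rules of its own.
RAW_STORE = {"report-q3": "confidential figures"}

# The access policy lives beside the data, known only to apps that consult it.
ALLOWED_READERS = {"report-q3": {"alice"}}


def app_a_read(user: str, key: str) -> str:
    """App A remembers to check the policy before touching storage."""
    if user not in ALLOWED_READERS.get(key, set()):
        raise PermissionError(f"{user} may not read {key}")
    return RAW_STORE[key]


def app_b_read(user: str, key: str) -> str:
    """App B reuses the same dataset but omits the check."""
    return RAW_STORE[key]
```

Nothing crashes here. A caller who is refused by `app_a_read` simply gets the same data through `app_b_read`, because the rule was never part of the storage layer to begin with.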

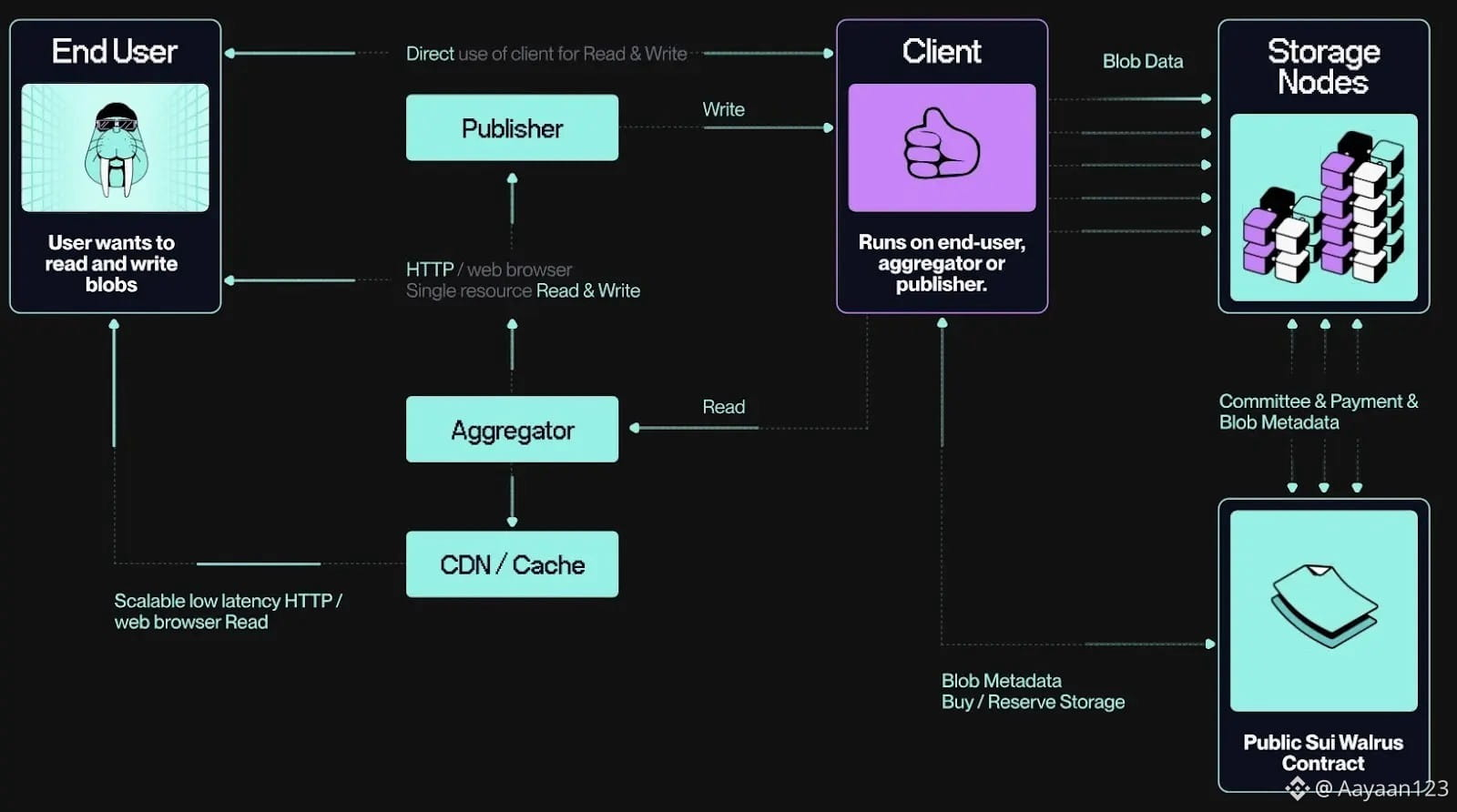

Walrus approaches this problem from a lower level. Instead of assuming data should be open unless guarded elsewhere, it treats access as part of the data’s identity. Who can read, write, or reference something isn’t a suggestion enforced by convention. It’s a rule enforced by the system itself. That shift changes the meaning of “protected.” Protection stops being something layered on top and starts being something embedded.
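One way to picture access as part of the data’s identity is a store that keeps the policy with each object and checks it on every read. The sketch below assumes hypothetical names (`ProtectedBlob`, `PolicyStore`) and is a toy model of the idea, not Walrus’s actual interface or enforcement mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectedBlob:
    """Hypothetical record: the payload and its read policy travel together."""
    payload: str
    readers: frozenset


class PolicyStore:
    """Hypothetical store that checks the blob's own policy on every read."""

    def __init__(self) -> None:
        self._blobs = {}

    def put(self, key: str, payload: str, readers: set) -> None:
        # The policy is fixed at write time and stored with the data.
        self._blobs[key] = ProtectedBlob(payload, frozenset(readers))

    def read(self, user: str, key: str) -> str:
        blob = self._blobs[key]
        if user not in blob.readers:
            raise PermissionError(f"{user} may not read {key}")
        return blob.payload


store = PolicyStore()
store.put("report-q3", "confidential figures", readers={"alice"})


def careless_app_read(user: str, key: str) -> str:
    """An app with no checks of its own still cannot bypass the store's rule."""
    return store.read(user, key)
```

In this model, an application that forgets its own checks inherits the store’s rule anyway, since `store.read` is the only path to the payload.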

What’s striking is how rarely users articulate this difference, even when they feel it. They’ll say an app feels more stable. That features behave consistently. That things don’t quietly change behind the scenes. They won’t say it’s because access control moved closer to the data. But that’s usually the reason. When boundaries are enforced at the foundation, fewer assumptions leak upward.

This also clarifies a misconception users often carry: decentralization alone doesn’t guarantee protection. A system can be decentralized and still expose more than people expect. Data can be distributed and still be easy to misuse. Protection isn’t about where data lives; it’s about how it’s governed once it’s there.

Walrus doesn’t promise that everything is hidden or locked down. That would be unrealistic. What it does is make the rules explicit. If something is protected, it’s protected by design, not by habit. If something is shared, it’s shared deliberately. That clarity matters because it removes the gray area where most surprises happen.

The difference between assumed protection and actual protection becomes more important as systems mature. Early users forgive ambiguity. Long-term users don’t. Builders can move fast when stakes are low. They can’t when data becomes part of real workflows, real coordination, and real value. At that stage, guessing where protection begins and ends is no longer acceptable.

Walrus sits in that transition. It doesn’t try to educate users with warnings or slogans. It changes the defaults quietly. Over time, that’s what reshapes expectations. People stop assuming protection exists somewhere else. They start trusting what the system actually enforces.

And that’s the moment the gap finally closes, not because users learned more, but because the infrastructure stopped asking them to assume.