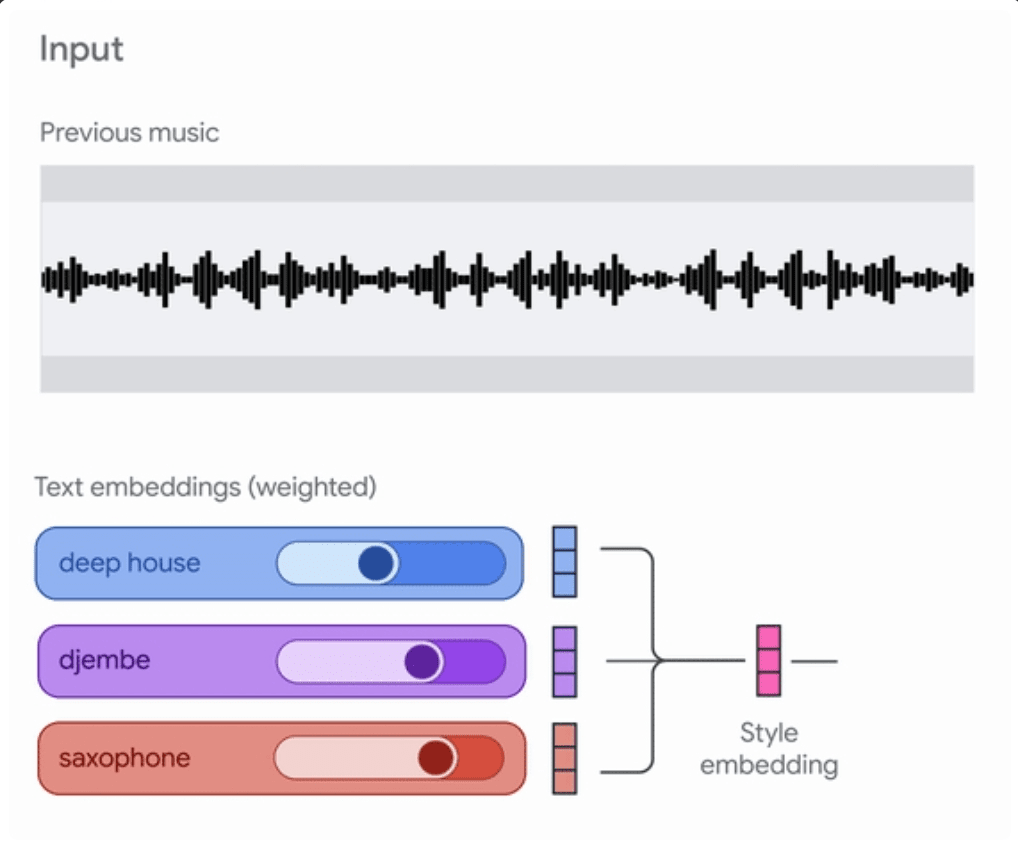

Google has launched Magenta RealTime, a new neural network for creating music in real time. Instead of generating entire tracks, the model creates music 2 seconds at a time, which allows it to be used even on low-end hardware.

The model has 800 million parameters and was trained on 190,000 hours of instrumental music (no vocals) from open sources. To keep the music coherent, it looks back at the last 10 seconds of what it has already generated. Generating one 2-second fragment takes about 1.25 seconds on the free tier of Google Colab, which is faster than the fragment takes to play, so generation can keep up with playback.
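The numbers above can be checked with a little arithmetic. The sketch below is only an illustration, not Magenta RealTime's actual API: `fake_generate_chunk` is a hypothetical stand-in for the model, and the constants are taken from the figures quoted in this post. It shows how a rolling 10-second context works out to five 2-second fragments, and why 1.25 seconds per fragment is enough for real-time playback.

```python
from collections import deque

# Figures quoted in the post
CHUNK_SECONDS = 2.0        # audio produced per generation step
CONTEXT_SECONDS = 10.0     # how far back the model looks
GENERATION_SECONDS = 1.25  # reported time per fragment on free-tier Colab


def real_time_factor(chunk_seconds, generation_seconds):
    """Seconds of audio produced per second of compute (>1 means real time)."""
    return chunk_seconds / generation_seconds


def context_chunks(context_seconds, chunk_seconds):
    """Number of whole fragments that fit in the context window."""
    return int(context_seconds // chunk_seconds)


def fake_generate_chunk(context, step):
    """Hypothetical stand-in for the model: returns a label instead of audio."""
    return f"chunk-{step}"


def simulate_stream(num_chunks, generate_chunk):
    """Generate fragments one by one, keeping only the most recent context."""
    window = deque(maxlen=context_chunks(CONTEXT_SECONDS, CHUNK_SECONDS))
    output = []
    for step in range(num_chunks):
        chunk = generate_chunk(list(window), step)
        window.append(chunk)  # oldest fragment drops out once the window is full
        output.append(chunk)
    return output, list(window)


if __name__ == "__main__":
    print("real-time factor:", real_time_factor(CHUNK_SECONDS, GENERATION_SECONDS))
    print("fragments in context:", context_chunks(CONTEXT_SECONDS, CHUNK_SECONDS))
    output, window = simulate_stream(8, fake_generate_chunk)
    print("context after 8 fragments:", window)
```

With these figures, each second of compute yields more than one second of audio, which leaves some headroom before playback would start to stutter.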

Magenta RealTime builds on the Magenta project, which began in 2016 as a study of AI creativity. Its first experiments with generating music in the style of Bach and jazz date from that period. The new approach makes the process interactive and accessible: users can steer the composition while it is being generated.

The source code is published on GitHub, the model is available on Hugging Face, and the demo works in Google Colab.
