Somewhere in the exponential curve of artificial intelligence development, between GPT-3 and the models that followed, the technology industry confronted an uncomfortable truth: we were running out of places to put everything. Not storage capacity in the abstract sense (data centers could always add more drives), but economically viable, reliably accessible, verifiably authentic storage for the tsunami of training data, inference results, model weights, and generated content that AI systems were producing at volumes that made previous data explosions look quaint.

The problem wasn't just size, though that mattered enormously. It was the intersection of size, cost, verification, and governance. When an AI model trains on billions of data points, someone needs to prove that training data actually exists and hasn't been tampered with. When generated content floods the internet, distinguishing authentic sources from hallucinated or manipulated versions becomes existential for any system built on trust. When data markets emerge around proprietary datasets and model outputs, participants need infrastructure guaranteeing what they're buying actually matches what they're getting, and will remain accessible when needed.

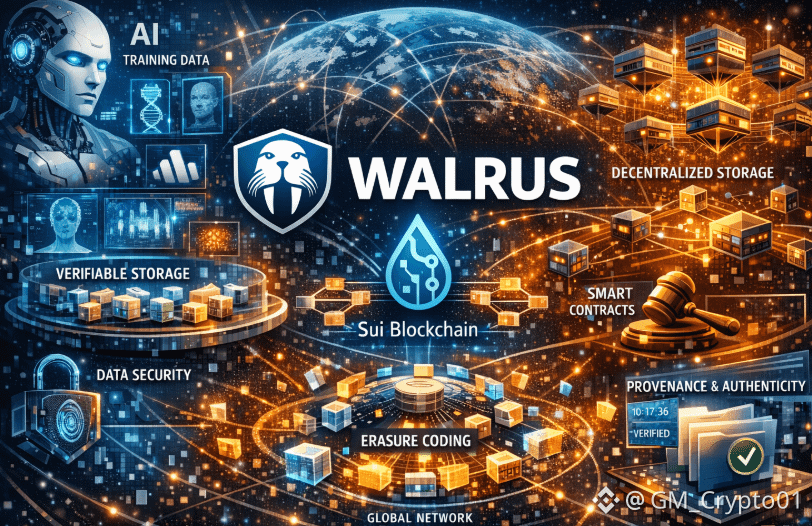

Walrus emerged as an answer to this convergence of challenges, though understanding why requires appreciating how fundamentally different decentralized storage is from the mental model most people carry around about "the cloud." Traditional cloud storage is conceptually simple: your data lives on someone else's computer, replicated a few times for redundancy, accessible as long as you keep paying Amazon or Google or Microsoft. It works beautifully until it doesn't: until a single company decides your content violates terms of service, until geopolitical considerations make certain data inaccessible in certain regions, until the economics of storing petabytes for decades become prohibitive for all but the largest enterprises.

What makes Walrus architecturally interesting is how it inverts traditional storage assumptions. Instead of storing complete copies of data on multiple servers, where cost grows linearly with the number of copies, Walrus employs advanced erasure coding that can reconstruct entire files from partial fragments. Think of it like a sophisticated puzzle where you only need, say, 60% of the pieces to see the complete picture. In that picture, data can be distributed across a global network of storage nodes, and even if 40% of the fragments disappear, malfunction, or turn malicious, the original blob remains perfectly retrievable.
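The "any k of n pieces" idea can be made concrete with a toy code. The sketch below is not the encoding Walrus actually uses; it is a minimal polynomial-based erasure code over a small prime field, with `encode`, `decode`, and the parameters `k=3`, `n=5` chosen purely for illustration (3 of 5 matches the 60% figure in the analogy above).

```python
# Toy k-of-n erasure code over the prime field GF(257).
# Illustrative only: this is NOT the encoding Walrus actually uses.
P = 257  # a prime larger than any byte value


def _lagrange_eval(points, x):
    """Evaluate at x the unique polynomial through the (xi, yi) points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data: bytes, k: int, n: int):
    """Split data into groups of k bytes and emit n fragments (lists of ints)."""
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    fragments = [[] for _ in range(n)]
    for start in range(0, len(padded), k):
        # The k data bytes are the polynomial's values at x = 0..k-1.
        points = list(enumerate(padded[start:start + k]))
        for x in range(n):
            fragments[x].append(_lagrange_eval(points, x))
    return fragments


def decode(available: dict, k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k fragments, keyed by fragment index."""
    if len(available) < k:
        raise ValueError("need at least k fragments")
    chosen = list(available.items())[:k]
    out = bytearray()
    for pos in range(len(chosen[0][1])):
        points = [(x, frag[pos]) for x, frag in chosen]
        out.extend(_lagrange_eval(points, x) for x in range(k))
    return bytes(out[:length])


blob = b"walrus stores blobs"
frags = encode(blob, k=3, n=5)
survivors = {1: frags[1], 3: frags[3], 4: frags[4]}  # fragments 0 and 2 are lost
assert decode(survivors, k=3, length=len(blob)) == blob
```

In this toy scheme the storage footprint is n/k times the original size, and any two of the five fragments can vanish without losing data. Production systems use far more efficient codes, but the recovery property is the same in spirit.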

The economics of this approach are startling when considered at scale. Traditional replication might store three complete copies for redundancy, for a total footprint of 300% of the original data size. Walrus achieves similar or better fault tolerance at approximately 500% of the original data size, but distributed across many nodes rather than concentrated in a few locations. For massive datasets measured in petabytes or exabytes, paying 5x rather than 3x might seem counterintuitive until you realize the robustness trade-off: traditional replication fails catastrophically if all three locations experience correlated failures, while Walrus's distributed erasure coding remains resilient even with massive node losses.
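A back-of-the-envelope calculation shows why the larger footprint can buy far more durability. The sketch below assumes each node fails independently with the same probability, which is the friendliest possible case for replication (correlated failures only make it look worse). The node counts and the failure probability `p=0.1` are hypothetical values picked to match the 3x and 5x footprints, not Walrus's actual parameters.

```python
from math import comb


def loss_probability(n: int, k: int, p: float) -> float:
    """Probability that fewer than k of n pieces survive, when each node
    fails independently with probability p (a simplifying assumption)."""
    return sum(comb(n, s) * (1 - p) ** s * p ** (n - s) for s in range(k))


# Hypothetical parameters chosen only to match the 3x and 5x footprints.
replication = {"n": 3, "k": 1}   # three full copies, any one suffices
erasure = {"n": 100, "k": 20}    # 100 fragments, any 20 suffice

for name, cfg in (("3x replication", replication), ("5x erasure coding", erasure)):
    footprint = cfg["n"] / cfg["k"]
    print(f"{name}: footprint {footprint:.0f}x, "
          f"loss probability {loss_probability(p=0.1, **cfg):.3g}")
```

Under these assumptions replication loses data when all three copies fail, which happens with probability 0.1³ = 0.001, while the erasure-coded layout only loses data if more than 80 of its 100 nodes fail at once, an event many orders of magnitude less likely.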

The integration with the Sui blockchain elevates this from interesting distributed systems engineering to genuinely novel infrastructure. Storage space becomes a tradeable resource, a digital asset that can be owned, split, combined, and transferred like any other property. Stored blobs themselves become Sui objects with queryable metadata, enabling smart contracts to verify whether specific data exists, remains available, or needs lifecycle management. This composability unlocks use cases impossible in traditional storage paradigms.

Consider the emerging market for AI training datasets. A research institution might develop a uniquely valuable corpus of medical imaging data, properly anonymized and formatted for machine learning. In the Web2 world, monetizing this requires complex licensing agreements, ongoing infrastructure costs for hosting, and essentially trusting that buyers won't just copy everything and disappear. With Walrus, that dataset becomes a verifiable on-chain object. Smart contracts can enforce access rights, prove authenticity, automatically handle payments, and guarantee the data remains available for the contracted period without requiring the original institution to maintain expensive always-on infrastructure.

The WAL token's role in this ecosystem extends beyond simple payment rails. By tying storage node selection to delegated proof-of-stake, Walrus creates economic alignment between token holders and network quality. Storage nodes with more delegated stake earn more rewards, but only if they actually perform their duties reliably. Token holders have an incentive to delegate to competent, trustworthy operators because poor performance means reduced rewards. This creates a market-based quality mechanism where the storage network's reliability emerges from economic incentives rather than centralized control.
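The incentive logic can be illustrated with a deliberately simplified reward rule. This is a sketch under stated assumptions, not the actual WAL reward formula: the `Node` fields, the `performance` score, and the stake-times-performance weighting are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    delegated_stake: float  # WAL delegated to this node (hypothetical units)
    performance: float      # fraction of duties fulfilled this epoch, 0.0 to 1.0


def distribute_rewards(nodes, reward_pool: float) -> dict:
    """Split reward_pool in proportion to stake scaled by performance."""
    weights = {n.name: n.delegated_stake * n.performance for n in nodes}
    total = sum(weights.values())
    if total == 0:
        return {name: 0.0 for name in weights}
    return {name: reward_pool * w / total for name, w in weights.items()}


nodes = [
    Node("reliable", delegated_stake=1_000, performance=1.0),
    Node("flaky", delegated_stake=1_000, performance=0.5),
]
rewards = distribute_rewards(nodes, reward_pool=300.0)
print(rewards)  # {'reliable': 200.0, 'flaky': 100.0}
```

With equal stake, the node that met only half of its duties earns half as much, so delegators who share in those rewards have a direct reason to move their stake toward the reliable operator.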

The epoch-based committee rotation prevents ossification while maintaining stability. Storage nodes can't become entrenched gatekeepers because committee membership refreshes periodically based on stake. But the transitions are managed smoothly enough that data availability isn't disrupted. It's a delicate balance between decentralization's resilience and operational continuity that many blockchain projects struggle to achieve, often sacrificing one for the other.

What makes Walrus particularly well-positioned for the AI era is how it addresses data provenance and authenticity. When large language models generate content, when deepfakes become indistinguishable from reality, when synthetic data trains the next generation of AI systems, the ability to cryptographically prove the origin, timestamp, and integrity of data becomes crucial infrastructure. Walrus doesn't just store blobs; it creates an immutable record of what was stored, when, and by whom. That audit trail becomes increasingly valuable as AI-generated content proliferates and questions of authenticity dominate information environments.
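The "what, when, and by whom" record can be pictured as a small structure built around a cryptographic digest. The sketch below is an assumption-laden illustration: `make_record` and `verify` are hypothetical helpers, SHA-256 stands in for however Walrus actually derives blob identifiers, and in a real deployment the record would live on-chain, which is what makes it tamper-evident.

```python
import hashlib
from datetime import datetime, timezone


def make_record(blob: bytes, owner: str) -> dict:
    """Build a provenance record: what was stored, when, and by whom."""
    return {
        "digest": hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
        "owner": owner,
        "stored_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(blob: bytes, record: dict) -> bool:
    """Check that a retrieved blob matches the digest and size in its record."""
    return (
        len(blob) == record["size"]
        and hashlib.sha256(blob).hexdigest() == record["digest"]
    )


original = b"training-set shard 0001"
record = make_record(original, owner="research-lab")
assert verify(original, record)
assert not verify(b"training-set shard 0001 (edited)", record)
```

Anyone holding the record can later confirm that the bytes they retrieved are exactly the bytes that were registered, without trusting whoever served them.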

The flexible access model acknowledges a practical reality that crypto purists often resist: most developers and users don't want to interact directly with blockchain complexity. By supporting traditional HTTP interfaces alongside native CLI and SDK options, Walrus meets users where they are. A content delivery network can cache frequently accessed Walrus blobs, serving them with the performance characteristics users expect from Web2 infrastructure, while the underlying storage remains verifiably decentralized. This pragmatism about user experience, combined with uncompromising decentralization in the core protocol, represents a maturation in how blockchain infrastructure gets built.

The technical sophistication of fast linear fountain codes augmented for Byzantine fault tolerance might sound academic, but it solves a fundamental problem in distributed systems: how do you efficiently reconstruct data from an untrusted network where some nodes might actively try to deceive you? Traditional error correction assumes honest failures: hardware breaking or connections dropping. Byzantine fault tolerance assumes adversaries: nodes deliberately sending corrupted data to undermine the system. Combining these properties efficiently required genuine innovation in coding theory and distributed systems design.
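One common way to defend a reader against deliberately corrupted fragments is to check each one against a digest published when the data was written. The sketch below shows only that filtering step and is not Walrus's actual protocol: `commit` and `accept_valid` are hypothetical names, and it assumes the list of commitments comes from a source the reader already trusts, such as an on-chain record.

```python
import hashlib


def commit(fragments):
    """Writer side: record one SHA-256 digest per fragment."""
    return [hashlib.sha256(f).hexdigest() for f in fragments]


def accept_valid(responses: dict, commitments) -> dict:
    """Reader side: keep only fragments whose digest matches its commitment."""
    return {
        idx: frag
        for idx, frag in responses.items()
        if hashlib.sha256(frag).hexdigest() == commitments[idx]
    }


fragments = [b"frag-0", b"frag-1", b"frag-2", b"frag-3", b"frag-4"]
commitments = commit(fragments)

# Node 2 is Byzantine and returns tampered bytes; node 4 never answers.
responses = {0: b"frag-0", 1: b"frag-1", 2: b"EVIL", 3: b"frag-3"}
valid = accept_valid(responses, commitments)
assert sorted(valid) == [0, 1, 3]
```

The tampered fragment is simply discarded, so a malicious node is reduced to the same effect as a crashed one, and the erasure code only has to cope with missing pieces rather than wrong ones.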

As data markets emerge and mature, as AI training becomes increasingly dependent on vast, diverse, verifiable datasets, as regulatory requirements around data retention and authenticity intensify, the infrastructure supporting these needs becomes critical rather than optional. Walrus positions itself at this intersection, not as a speculative bet on decentralization ideology, but as practical infrastructure solving real problems that traditional cloud storage can't adequately address.

The protocol's focus on unstructured data proves particularly apt for AI applications. Images, audio, video, raw text, sensor readings: these formats dominate AI training and inference workflows, and they're exactly what Walrus handles efficiently. Structured database operations remain better served by other technologies, but for the blob storage that increasingly dominates data growth curves, Walrus offers a compelling alternative to centralized providers.

Whether Walrus captures significant market share from established cloud storage providers remains to be seen. Inertia favors incumbents, and AWS and Google Cloud aren't standing still. But the unique properties that decentralized storage enables (the verifiability, the censorship resistance, the market-based quality assurance, the composability with smart contracts) aren't features traditional providers can easily replicate without fundamentally restructuring their business models. For applications where those properties are essential rather than nice to have, Walrus isn't competing on cost or convenience; it's offering capabilities that simply don't exist elsewhere. And in an AI-driven future where data authenticity and availability become ever more critical, that positioning might prove prescient.